Dreaming with AI: Prompts, Visuals, and Reality

Learn text-to-image prompting with Midjourney: Create visuals faster than ever.

Remember that one time when a hot air balloon got stuck in electrical power lines and could not fly?

Yeah, me neither — because it never happened! The image you are seeing above was created by Midjourney, a generative AI.

Generative AI made global headlines last year when Jason Allen submitted an AI-generated artwork to a competition and won the prize, but the applications of this dreamy technology will touch almost every major industry.

Here’s what we will cover today (jump to whichever section you want):

Intuition for how text-to-image Generative AI works and its applications.

Using Midjourney: Prompting basics and some advanced tips.

Reality check: More advanced applications and what that means for us.

Don’t worry if you are new to this — you will quickly realise that once you know how to communicate with these tools, it is pretty simple.

View this in a browser or the app for a better experience (lots of images ahead).

Treat to the eyes, Generative AIs

(that rhymes!)

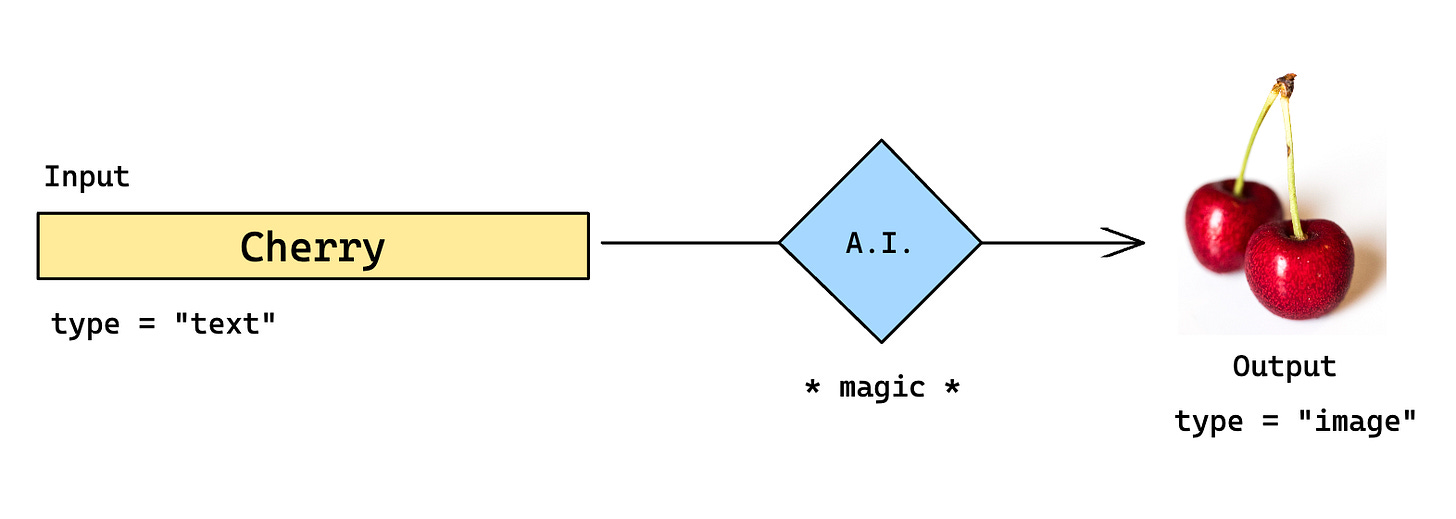

Text-to-image AIs are trained on billions of images to understand different attributes and relationships. When you give these AIs a text prompt, they draw from their knowledge and create an image for you.

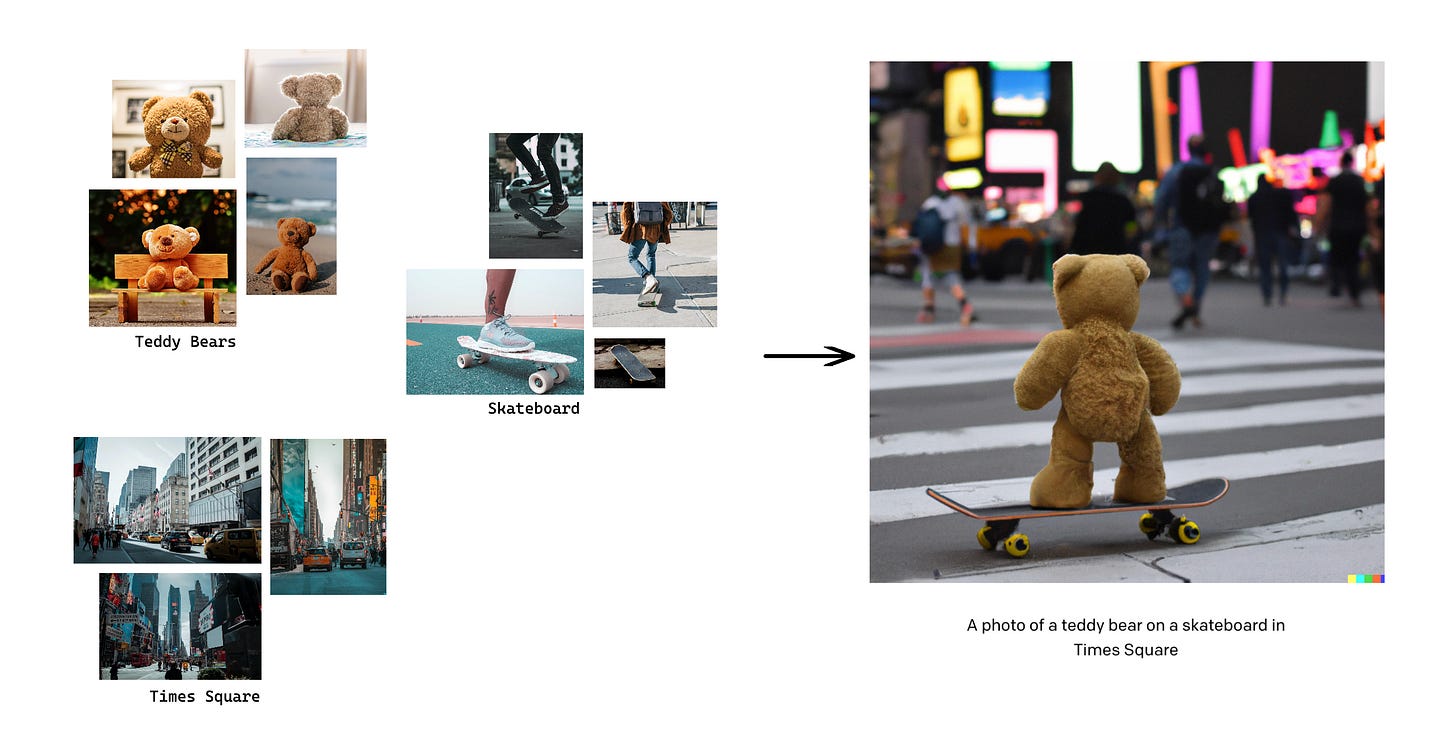

Take an example: You want to create a “photograph of a teddy bear on a skateboard in Times Square”. Put yourself in the shoes of the AI and think about the details you would need:

What do teddy bears usually look like? I am not told whether it is a brown teddy or a white one, so I will choose the most likely colour.

What is skateboarding? What is a skateboard? What does it mean to be “on” a skateboard? When someone is “on” a skateboard — where do they stand with respect to the board?

What is “Times Square”? What does it look like from different angles?

How should I present the image? Should the teddy face the camera or away from it? Should I take the photograph from the top or at the same level as the road?

Depending on how detailed your prompt is, you will get several variations for it.

There are, of course, some very complex models and techniques behind these AIs, but we will not get into it right now (let’s save that for a future post!).

There are two popular applications:

Create an entirely new image (we will focus on this right now)

Modify an existing image

Generative AI gives you the power to express yourself within seconds. Here are some examples to showcase different applications for text-to-image AIs (generated using Midjourney):

Designing products

/imagine a handbag design inspired by fruits and plants, quirky, youthful

What you see above is a prompt for Midjourney. We will talk about these soon. The images below are the outputs from that prompt.

Interior design and architecture

/imagine an office space design, minimal, plants, lot of sunlight, sofas, comfortable, modern art paintings, double height sitting area, front desk near the door

/imagine design a house shaped like a shoe, modern architecture, stable structure, plenty of windows, surrounded by a garden

UI design

/imagine a homepage design for a mental health app used for journalling, minimal, pastel colours, avant-garde style

Character design

/imagine portrait of an older queen from a civilisation of farmers, sitting on a throne, grass, backdrop has a bright summer sky, detailed

Photographs or Art based on a new concept

/imagine a golden retriever enjoying a holiday at the pyramids of Giza in Egypt, sunny day, detailed

/imagine at the end of time, universe collapsing into itself rapidly, hyperrealistic oil painting

If this got you excited, let’s explore how you can bring your ideas to life! We will look at examples from Midjourney here, but you can apply these to write prompts for other generative AIs too.

Talking to Midjourney

Midjourney understands our words, but not exactly in the same way as humans do. Being able to explain what you want to create is the difference between a "good enough" and a "great" output.

AIs will get better at understanding us with new developments but they still can’t read our minds!

How to approach prompting

State what truly matters and is the focus of your work. If you add too many details, it might confuse the AI. Think: What are the elements I really want in this image or video?

Then, to describe what is on your mind, answer the following questions:

Main subject: What is this about? Do you want a website design or do you want a new male character for your video game?

Type of work: What type of work do you want to create? Is it going to be a photograph taken from a phone camera, a hand-drawn artwork, or a cartoon?

Setting: Are there any elements of the background or environment that you want to add? Is this an outdoor photoshoot at the beach or an interior one? Are you in India or Japan? Is it raining?

Colours: What do you want this to look like? Do you want warmer tones or black & white? Would you like pastel colours or neon highlights?

Emotions: What is the emotion of the subject or the overall work? Do you want a melancholy setting or a bright one? Is the generated character confident or shy? Is the weather angry (storm) or calm (clear skies)? Use emotions to add more depth or a mood to the work.

Detailing: Are you taking this image with the best DSLR camera in the market? Are you focusing on the character to make this a portrait? Would you like a wider view of the landscape?

Using generic adjectives may not get you far. A person could be sad because they are feeling lonely, or they could be sad because they are feeling embarrassed. If you state the emotion as “sad”, the AI has no choice but to assume what you actually mean.
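If you like to think in code, here is a minimal sketch of how the answers to those questions could be stitched into one prompt. The `PromptIdea` class and its field names are hypothetical, made up for this illustration. Midjourney does not need anything like this; the code only builds the text you would type after “/imagine”.

```python
from dataclasses import dataclass


@dataclass
class PromptIdea:
    """Hypothetical container for the answers; only main_subject is required."""

    main_subject: str
    type_of_work: str = ""
    setting: str = ""
    colours: str = ""
    emotions: str = ""
    detailing: str = ""

    def to_prompt(self) -> str:
        # Lead with the type of work (if given) so it reads "photograph of ...".
        if self.type_of_work:
            subject = f"{self.type_of_work} of {self.main_subject}"
        else:
            subject = self.main_subject
        # Append the remaining answers, skipping any that were left blank.
        details = [self.setting, self.colours, self.emotions, self.detailing]
        parts = [subject] + [d.strip() for d in details if d.strip()]
        return "/imagine " + ", ".join(parts)


idea = PromptIdea(
    main_subject="a teddy bear on a skateboard",
    type_of_work="photograph",
    setting="Times Square",
    emotions="playful",
    detailing="detailed",
)
print(idea.to_prompt())
# /imagine photograph of a teddy bear on a skateboard, Times Square, playful, detailed
```

Blank answers are simply skipped, which matches the advice above: state what truly matters and leave out the rest.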

Basic prompting examples

Let us look at an easy example to understand this:

You are working on a book and want to illustrate it with images. The scene you want to illustrate is this: it has just stopped raining and your main character is walking alone at night — she encounters a cafe on the way, which brings a nostalgic feeling to her heart.

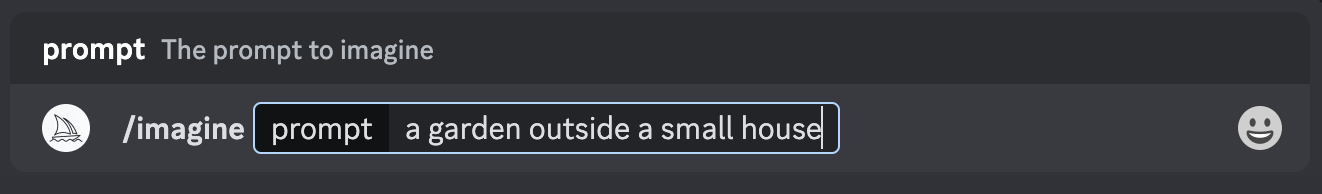

To give Midjourney a prompt on Discord, use “/imagine”:

Prompt 1

/imagine a cafe on a street, late night

Okay, not bad, but you want this to be more realistic. The current image output is in a cartoon-anime style. Also, it doesn’t look like it was taken after a rain.

Prompt 2

/imagine a cafe on a street, late night after rains

This one is much better. You would want this to be more detailed though, and how about making the atmosphere a bit old-fashioned to give a nostalgic touch?

Prompt 3

/imagine a cosy, quaint cafe on a street, late night after rains, detailed, Nikon Z7 II

I really love this one! Adding the camera specifications helped Midjourney understand the quality and texture. You can get more detailed with this if you have something in mind. Here is another variation that came up:

Advanced Prompting

When you have a very particular idea in mind, it can be tricky to leave it up to the imagination of the AI. Some interesting commands on Midjourney can help:

Parameters

Midjourney lets you use parameters to further customise your image output. Here are my three most-used parameters:

--ar: Sets the aspect ratio. I usually go for --ar 1:1, --ar 16:9, or --ar 9:16

--no: Removes an element. Certain objects generally go together such as french fries and ketchup. If you want the AI to create an image with french fries but do not want ketchup, use this parameter (--no ketchup)

--tile: Generates images that can be repeated as tiles to form a seamless pattern.

Here are some examples:

/imagine a beautiful landscape in the hills, 10 fluffy dogs roaming around, --ar 16:9

Here’s another version to make your day even better:

/imagine a garden outside a small house, full of greenery, --no flowers

/imagine a new type of flower from a fantasy planet --tile
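If you reuse the same parameters often, a small helper can tack them onto a prompt for you. This is only a sketch: `add_parameters` is a hypothetical function written for this post, not part of Midjourney. It just formats the text you would paste into Discord. It assumes that several unwanted elements can be listed after a single --no, separated by commas.

```python
import re


def add_parameters(prompt, aspect_ratio=None, exclude=None, tile=False):
    """Append --ar, --no and --tile to a prompt string (hypothetical helper)."""
    # Drop a trailing comma so the parameters follow the description cleanly.
    parts = [prompt.strip().rstrip(",")]
    if aspect_ratio is not None:
        # Expect "width:height" in whole numbers, for example "16:9".
        if not re.fullmatch(r"[1-9]\d*:[1-9]\d*", aspect_ratio):
            raise ValueError(f"aspect ratio must look like 16:9, got {aspect_ratio!r}")
        parts.append(f"--ar {aspect_ratio}")
    if exclude:
        # Assumption: all unwanted elements share one --no, comma-separated.
        parts.append("--no " + ", ".join(exclude))
    if tile:
        parts.append("--tile")
    return " ".join(parts)


print(add_parameters(
    "/imagine a garden outside a small house, full of greenery",
    exclude=["flowers"],
))
# /imagine a garden outside a small house, full of greenery --no flowers
```

Keeping the parameters at the very end of the prompt mirrors the examples above, where they always come after the description.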

Blend

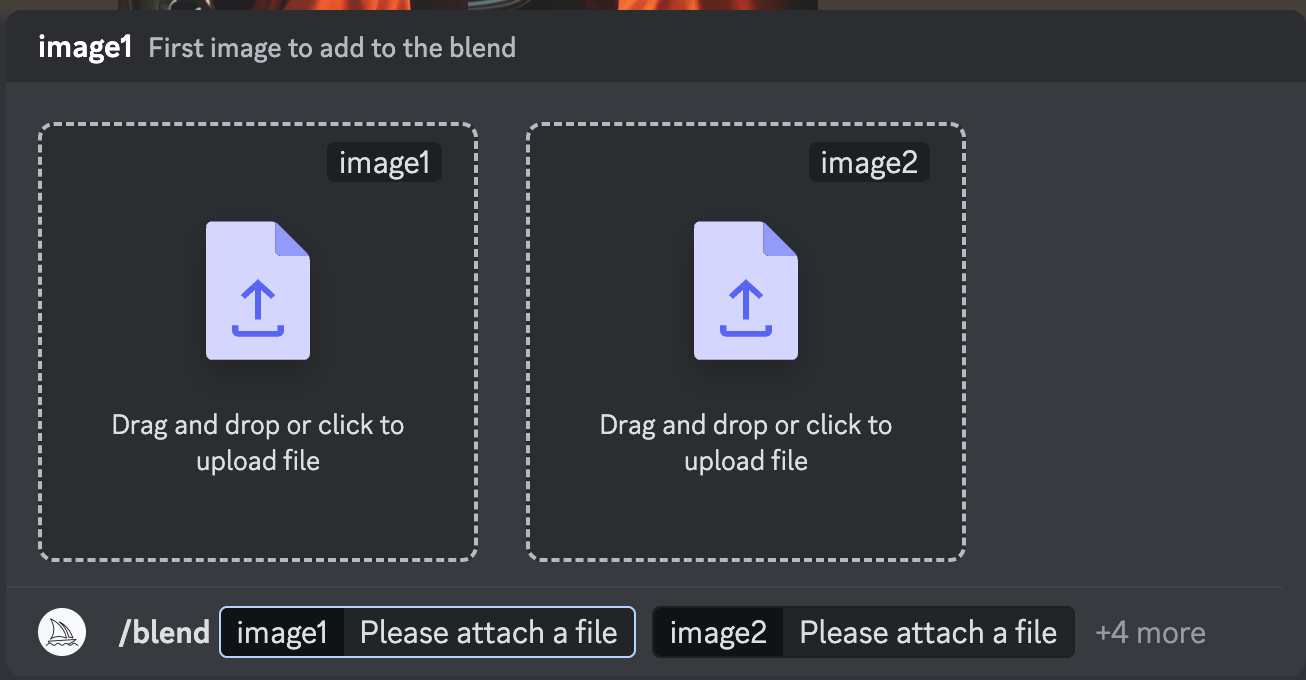

Midjourney lets you blend the style of multiple images together. With the “/blend” command and the relevant images as inputs, you can create unique works of art and design.

Take this example:

You are working on a new advertisement campaign for clothes inspired by 17th century fashion, but a photoshoot will take a lot of time and cash.

What if you could take a popular painting from that era and merge it with one of your older photoshoot images?

Blend the two images above to get:

Source: Nichole Sebastian from Pexels | Painting

Blurring the lines for “reality”

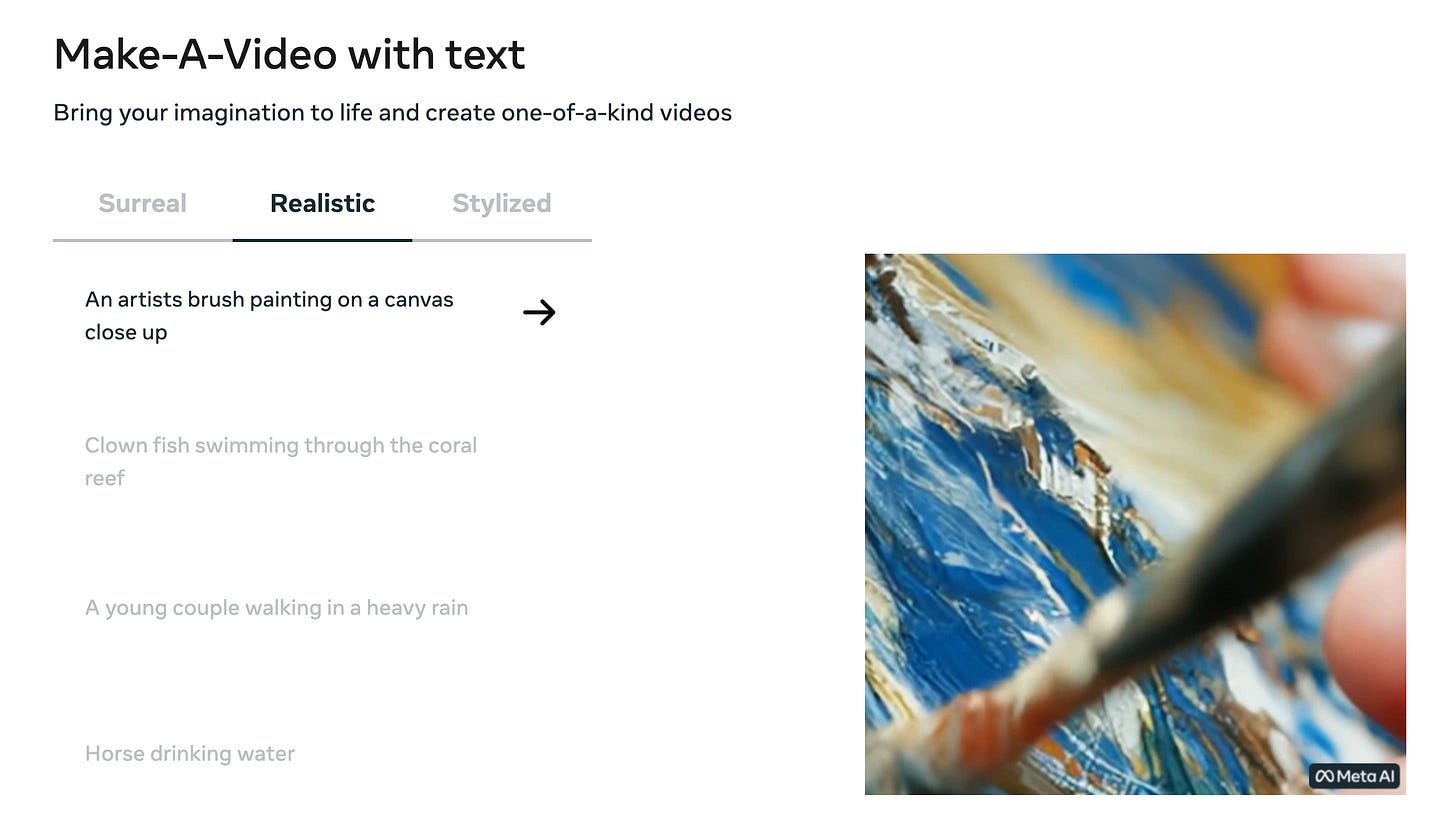

After text-to-image, new tools are emerging for text-to-video as well.

NVIDIA recently published a research paper on creating a simulation for human hair movements.

Blockade Labs’ Sketch-to-Skybox lets you convert a rough sketch into an entire virtual world.

While these are still in early stages, they leave me in awe of what is possible in the next few years.

Would we be able to tell real from AI-generated in a decade? The problem of deepfakes will only get worse. Would art as we know it cease to exist?

I do not believe that humans will stop creating. We are beings that find meaning in our creations. Art is not dead. Traditional art is even more valuable than before — it is 100% human-generated.

As with any new revolution in the history of humankind, we will just have to find ways to outweigh all the cons with the pros. Let’s do it!

P.S. Here’s a fun experiment from WSJ.
