The Ultimate AI Glossary

Struggling to keep up with the discourse on AI? Let's make it easy for you. Glossary updates every month.

The Ultimate AI Glossary can be used as a crash course in understanding all the common terms you will come across.

This glossary is carefully designed to be understood by folks without any prior background in Artificial Intelligence or Machine Learning. 👋 Examples used are very easy to understand and quite relatable.

⏰ Reading the entire list will take you only 80-120 minutes (120+ terms).

🔄 This list updates every month to include any new words or concepts.

📖 Please read in browser (here) if the complete list does not show in email.

Pick a section you like or simply search for what you want in the browser. Terms are arranged alphabetically under the following sections:

If you find any typos or errors, let me know. Editing this huge glossary was more intense than I had expected! I am not an AI/ML researcher but I have been building AI/ML products and projects since 2016.

Let’s go!

☀️ General

Artificial General Intelligence (AGI)

AGI is the idea of creating a machine that can think, learn, understand, and apply knowledge across various domains — just like a human being. In more human terms, AGI is expected to have a good level of creativity and common sense.

Examples:

Remember "WALL-E", the adorable robot who learns, adapts, and even develops emotions? That's a taste of AGI for you!

Ava from “Ex Machina” is another example of AGI. She shows emotion, interests or desires (to see the world), and the capability to learn and adapt (finds a means of escape and manipulates those around her).

Artificial Intelligence (AI)

Artificial Intelligence (AI) is intelligence created in the image of human intelligence. AIs are capable of performing tasks that usually require human levels of intelligence.

AGI can adapt and learn to perform a variety of tasks while AI is more suited to perform a specific task.

Examples:

Siri and Alexa are AI-powered voice assistants that help you do things like set alarms, check the weather, or tell you lame jokes (comedy is not their star attribute).

Google Translate uses AI to convert text from one language to another.

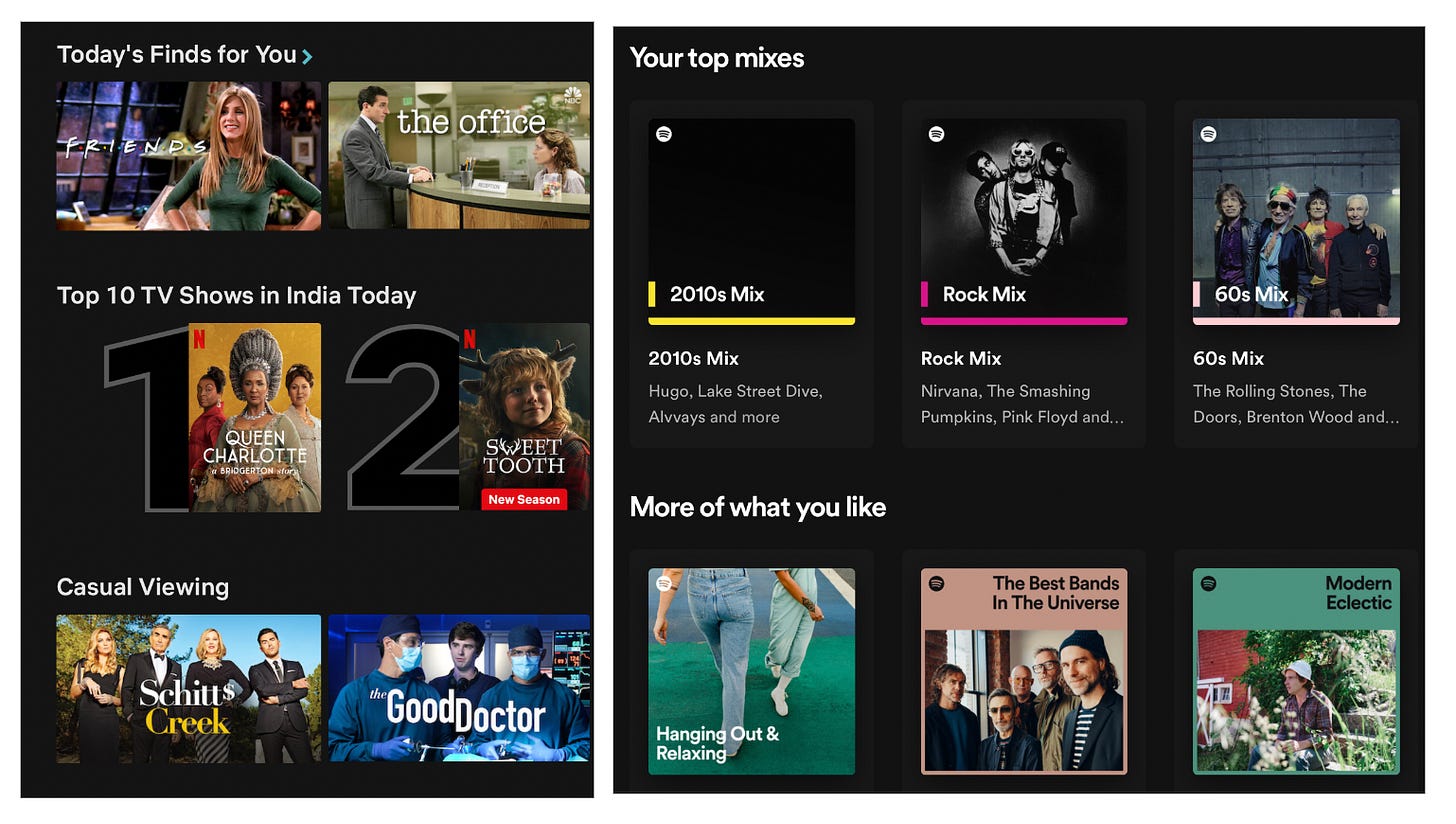

Netflix uses AI to suggest movies and TV shows based on your viewing history, ensuring you never run out of things to binge-watch.

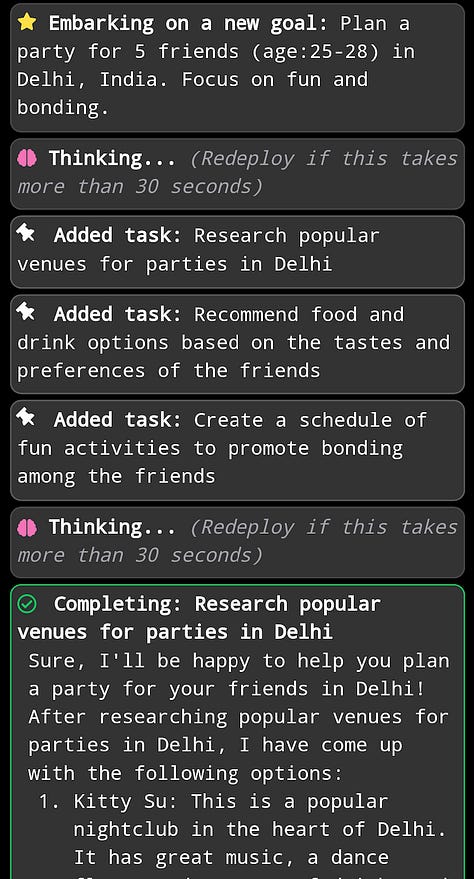

Artificial Intelligence Agent (AI Agent)

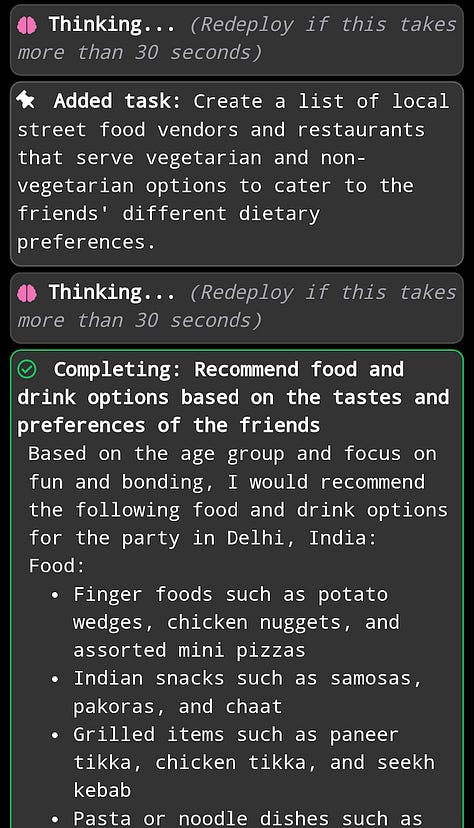

Let’s say you have an assistant. You can give this assistant any goal and they can figure out how to complete it for you. AI Agents can be thought of as such assistant AIs. They use AI and ML to process information, interact with their environment, and perform tasks without human intervention, like helpful little robots that don't need constant supervision.

Examples:

Customer support: AI agents can handle simple inquiries and resolve them on their own, such as checking the status of the user’s order and informing them when they ask for it or completing a refund.

Finance: AI agents can analyse market trends and manage investments.

Gaming: AI agents can work as realistic and challenging opponents that adapt their strategies based on your moves and learn as the game progresses.

Automated Machine Learning (AutoML)

Let us assume that you have never used machine learning before and do not have a background in computer science or data science. You have to create a model that recommends products to your customers. Imagine if someone could help you set up your datasets, clean the data, train suitable models on it, pick the right algorithm, and get the ML model deployed. AutoML does exactly that.

Examples:

Google's AutoML Vision helps businesses automatically identify and categorise images, like sorting pictures of cats from pictures of dogs.

A small business could use AutoML to analyse social media data and optimise their marketing strategy without having to hire a data scientist.

Autonomous

Autonomous refers to something that can operate independently (without human intervention). An autonomous AI can do your chores without you having to tell it what to do all the time.

Examples:

Self-driving cars are a prime example of autonomous technology on the road.

Robotic vacuum cleaners, like the Roomba, are autonomous helpers that keep our floors clean.

Bias

Bias in AI is like that one friend who always picks the same pizza, even though there's a whole menu to choose from. It is the presence of unintentional but inaccurate assumptions in AI and ML systems.

Bias can come from the data used to train these systems, like a preference for cat videos over dog videos (though who can blame them?), or from the algorithms themselves. It can lead to skewed or discriminatory results.

Examples:

An AI-powered hiring tool might inadvertently favour male candidates if it was trained on data where male applicants were more successful.

A facial recognition system might struggle with identifying certain ethnicities if its training data lacked diversity.

An AI-driven medical diagnostic tool could be biased towards specific age groups.

Bias Mitigation

Bias mitigation is the process of identifying and reducing biases, making sure that the AI is fair, accurate, and inclusive. Imagine giving your pizza-obsessed friend a gentle nudge to try something new from the menu.

Examples:

In AI-powered hiring tools, bias mitigation might involve reevaluating the training data to include a diverse range of applicants.

For facial recognition systems, bias mitigation could include gathering more diverse and representative data from all ethnicities and genders.

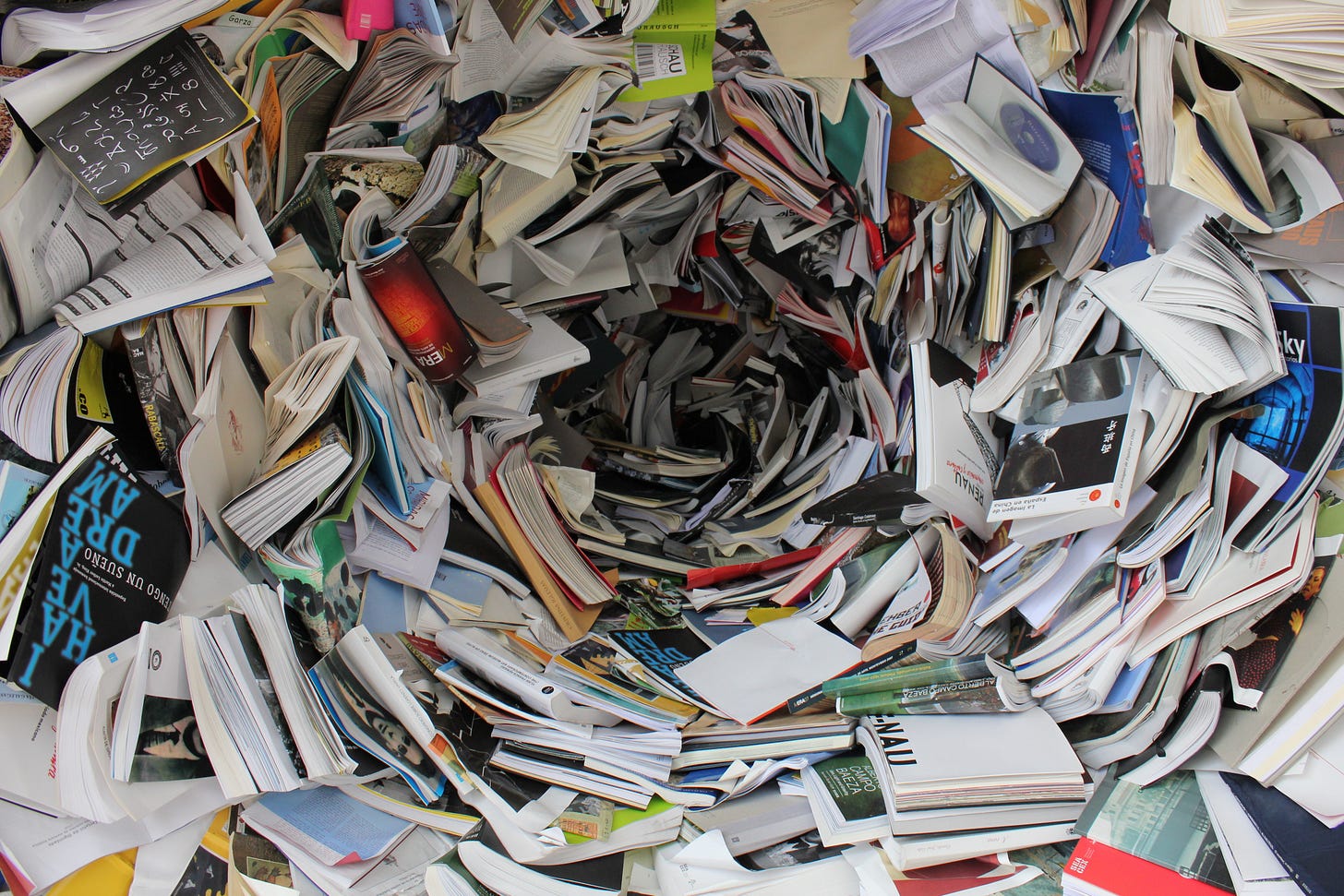

Big Data

Imagine you are working for Instagram and you have to find a marketing strategy for college students.

Well, you are dealing with Big Data. Often described as data that is very large in volume and grows every minute, Big Data cannot be processed with your traditional spreadsheets. In our example, 500 million users globally, performing all sorts of actions such as clicks, scrolls, and likes, and coming from different sources such as the Instagram website or app, are generating Big Data.

Big Data can come from various sources, such as social media, smartphones, and even your smart speaker. It can be analysed to reveal patterns, trends, and insights that help you make better decisions, improve public services, and maybe even predict the next big fashion trend!

Examples:

Retailers like Amazon analyse Big Data to understand customer preferences and recommend products, ensuring your shopping cart is never empty.

Healthcare organisations can use Big Data to track the spread of diseases and develop better treatment plans.

Traffic management systems can analyse Big Data from GPS devices, traffic cameras, and social media to optimise traffic flow, making your daily commute a little less soul-crushing.

Chatbots

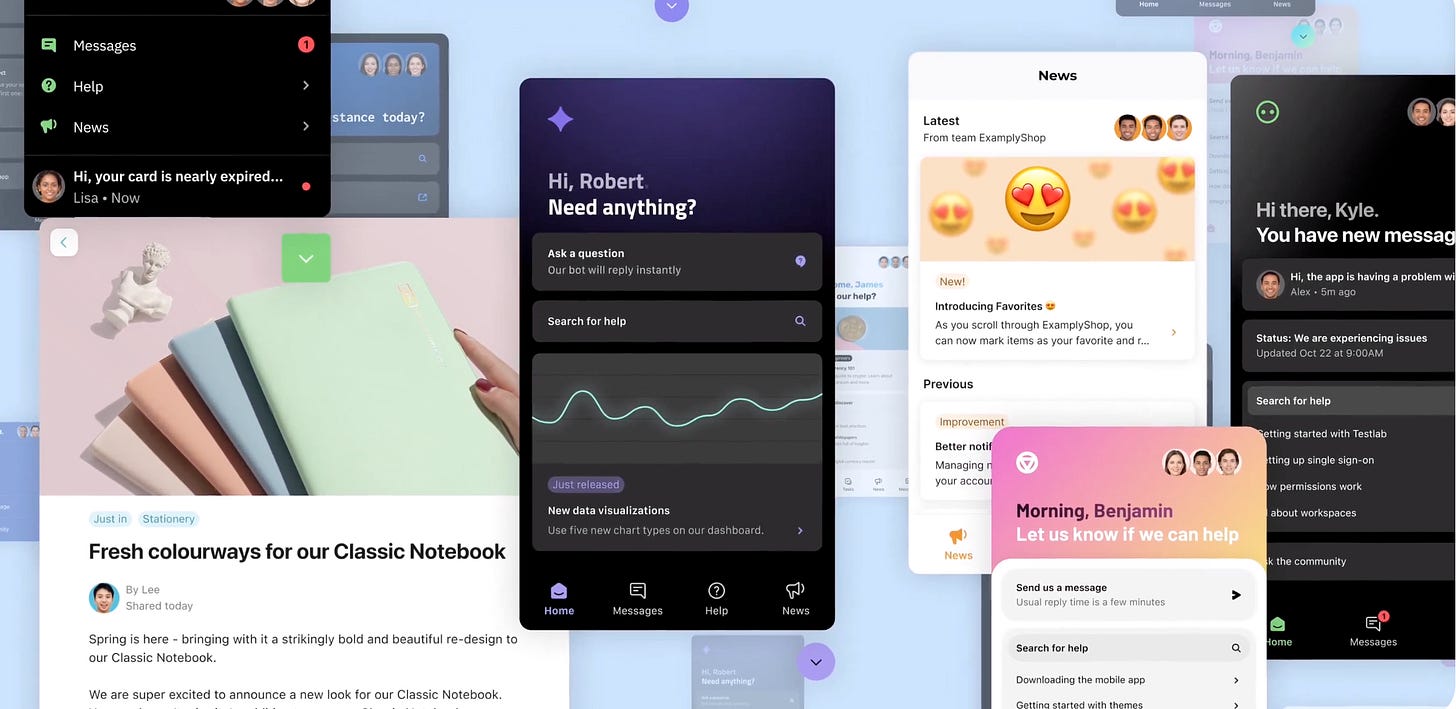

Chatbots are AI-powered virtual assistants that can communicate with humans through text or voice. Chatbots can help with tasks like customer support, making reservations, or just telling you a joke when you need a pick-me-up. They can be trained to perform specific tasks.

Examples:

Many businesses use chatbots on their websites to provide instant customer support, making sure you don't have to wait on hold for hours.

The Domino's Pizza chatbot allows you to order your favorite pizza without even having to talk to a human, ensuring your pizza cravings are satisfied ASAP.

Mental health chatbots, like Woebot, offer support for people dealing with anxiety or depression, providing a friendly ear when you need it most.

Computer Vision

When you see the world, you process a large amount of information including different objects and movement. We understand our world with our eyes.

Machines don’t see the world in the same way. A computer processing images as “pixels” will not be able to “understand” the image like we do. Computer Vision is a field of AI that enables machines to interpret and understand visual information from the world, allowing them to recognise objects, analyse images, and even read your handwriting (even if it looks like a doctor's prescription).

Examples:

Google Photos uses computer vision to recognise people, objects, and locations in your pictures, making it easy to find all the embarrassing photos of your best friend.

Self-driving cars use computer vision to "see" the road, other vehicles, and pedestrians, ensuring a safe and smooth ride.

Pokémon GO uses computer vision with augmented reality technology to overlay virtual Pokémon characters onto the real world so we can "see" and interact with them.

Conversational AI

Conversational AI powers the technology behind AI-driven chatbots and voice assistants, allowing them to understand and respond to human language. Using natural language processing, natural language understanding, dialog design, and speech generation, conversational AI can hold meaningful conversations with users and help them accomplish tasks. “Conversations” are at the centre of conversational AI, making you feel as if you are talking to another human being.

Example: Voice assistants such as Amazon's Alexa or Apple's Siri use conversational AI to provide helpful answers or carry out tasks.

Data Augmentation

Imagine if your new job included identifying different types of flowers found in a certain city. If you have only ever seen one type of rose and one type of lily, you may not be able to identify a new type of flower. Moreover, there could be different types of roses, and if the shape is somewhat different, it may throw you off.

Machine learning models suffer from the same problem, but at a much larger scale. These models do not possess the advanced pattern-matching and extrapolation capabilities of a human mind. To train them, we need a large amount of data.

Data Augmentation works by using the existing data and changing it just slightly to generate more data on which the model can be trained. Data augmentation helps AI systems become more robust and accurate, ensuring they don't get confused when faced with something new or unexpected. It's like teaching your dog to fetch in different weather conditions, so it doesn't get baffled by a little rain.

Examples:

In facial recognition systems, data augmentation can be used to create new images of faces with different angles, lighting, and expressions, ensuring the AI can recognize you even on a bad hair day.

For an AI system that diagnoses diseases based on images or medical scans, data augmentation can modify images to have varying levels of noise and distortion, making it more accurate in real-world conditions.

For self-driving cars, data augmentation can create new images of road signs and objects from different perspectives.
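To make the idea concrete, here is a minimal sketch in plain Python. It assumes a tiny made-up 3x3 grayscale "image" stored as a list of lists, and the helper names `flip_horizontal` and `add_noise` are hypothetical. Real projects usually rely on an image library, but the principle is the same: one original becomes several slightly different training examples.

```python
import random

def flip_horizontal(image):
    """Mirror the image left-to-right by reversing every row."""
    return [list(reversed(row)) for row in image]

def add_noise(image, amount, rng):
    """Shift each pixel by a small random value, clamped to the 0-255 range."""
    return [
        [min(255, max(0, pixel + rng.randint(-amount, amount))) for pixel in row]
        for row in image
    ]

# A made-up 3x3 grayscale "image" (0 = black, 255 = white).
original = [
    [0, 50, 100],
    [10, 60, 110],
    [20, 70, 120],
]

rng = random.Random(0)  # fixed seed so the run is repeatable
augmented = [original, flip_horizontal(original), add_noise(original, 5, rng)]
print(len(augmented), "training images from 1 original")
```

The flipped and noisy copies keep the same label as the original, which is what lets the model see more variety without anyone collecting new data.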

Data Mining

Data mining is the process of finding hidden gems after sifting through heaps of information. Large datasets are analysed to discover patterns, trends, and relationships to help businesses solve problems.

Data mining combines statistics, machine learning, and database technology, making it the ultimate tool for turning raw data into valuable insights.

Data mining can be considered a subset of data science in a way.

Examples:

Retailers can use data mining to analyse customer purchase histories and create personalised marketing campaigns — that is how you are always able to find coupons for your favourite snacks.

Financial institutions can mine data to mark potential cases of fraud or unusual spending patterns, keeping your hard-earned money safe.

Sports teams can use data mining to analyse player performance that helps in modifying their strategy and game plan, giving them an edge on the field.

Data Science

What happens when mathematics, statistics, big data, and computer science go to lunch together? Data Science! It is a field of research that deals with all types of raw data and applies complex algorithms to capture insights and patterns.

Examples:

Netflix uses data science to analyse user viewing habits and create personalised recommendations.

Political campaigns can employ data science to analyse voter preferences and tailor their messaging, helping them win hearts, minds, and eventually — votes.

Dataset

As the name suggests, a dataset is a collection of different types of information. Datasets are the foundation for building accurate and effective AI models because all models are trained and improved based on datasets.

Examples:

ImageNet is a dataset containing millions of images and their corresponding labels (the label for an orange will be “orange” so that the machine knows this is what an orange looks like), used to train AI models for object recognition.

The MNIST dataset is a popular collection of handwritten digits used to train AI models for recognising handwritten numbers.

Sentiment analysis AI models often use datasets of movie reviews, tweets, or product reviews, labeled with their sentiment or emotion (positive, negative, or neutral).

Deep Learning (DL)

Deep Learning teaches a machine to perform tasks by mimicking the way our brains work. Using artificial neural networks to model complex patterns and make decisions, deep learning allows AI systems to tackle problems that were once thought to be too complicated for computers, such as recognising people in videos or translating ancient texts.

Examples:

Deep learning algorithms are used in image recognition systems to automatically tag and organise your photos into different categories (selfies, group, photos of or with a certain person, photos taken in restaurants or parks, and so on).

Google Translate uses deep learning to convert text from one language to another.

Examples

Let’s say you are an event organiser and your client is throwing a surprise birthday party for her sister. She tells you, “Well, my sister has an eccentric sense of style. She will like decor that is more unique.” You will probably follow-up with, “Sure, but can you show me some examples?” “Unique” could mean anything in this context.

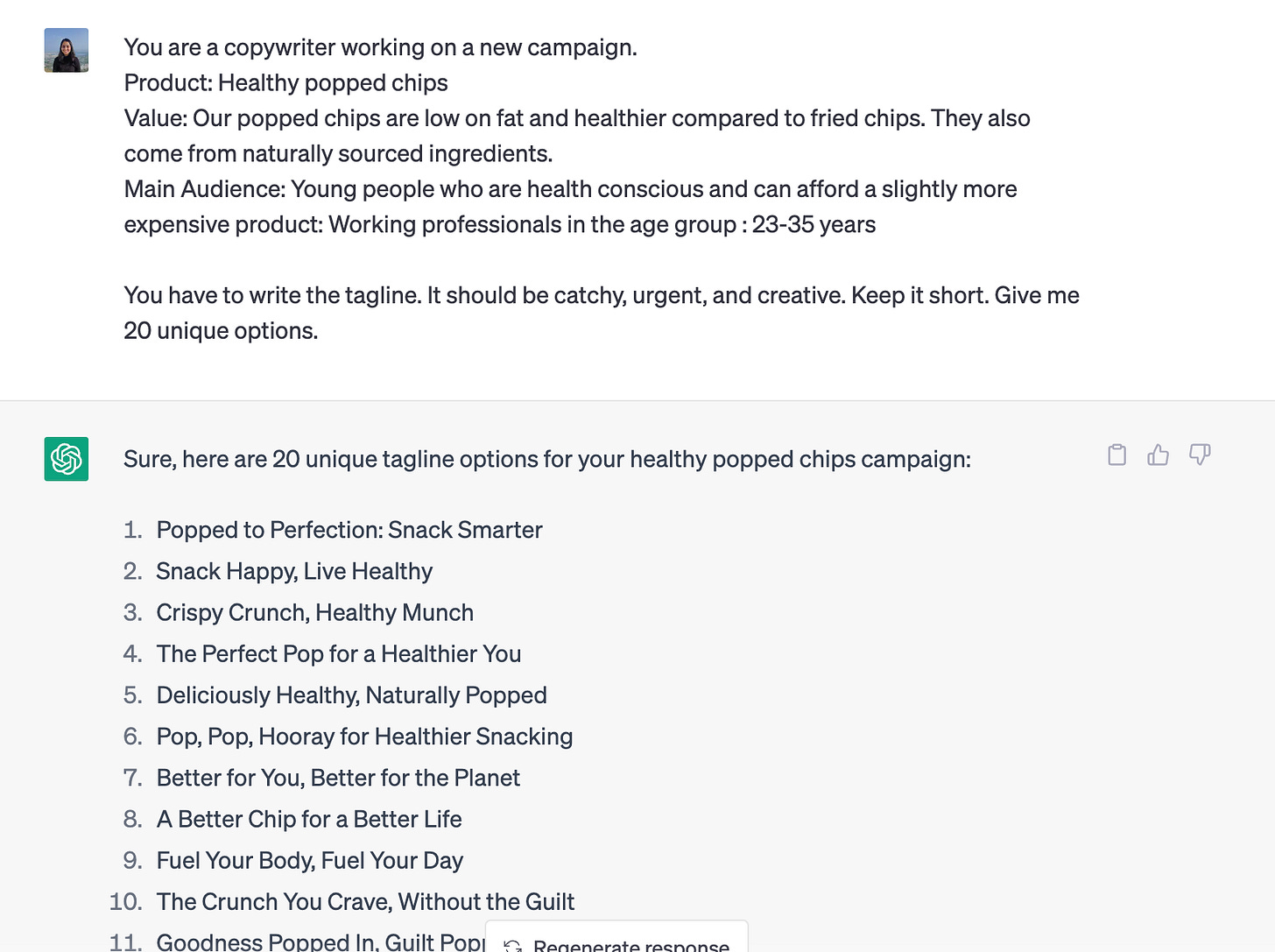

Examples help the AI understand what you are looking for. If you want the AI to work as a customer care chatbot and you want it to sound “friendly” and “professional” while greeting someone, it will help to show a few examples such as “Hello, nice to meet you! How can I help you today?”

This is especially helpful in prompt engineering to tune your output in the format you require.
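As a rough sketch of what "showing examples" can look like in practice, the snippet below assembles a prompt from an instruction plus a couple of sample exchanges. The function name `build_prompt`, the "Customer:/Agent:" layout, and the second sample greeting are assumptions made for illustration, not a required format.

```python
def build_prompt(instruction, examples, new_input):
    """Combine an instruction, sample input/output pairs, and the new input."""
    lines = [instruction, ""]
    for customer, reply in examples:
        lines.append(f"Customer: {customer}")
        lines.append(f"Agent: {reply}")
        lines.append("")
    lines.append(f"Customer: {new_input}")
    lines.append("Agent:")  # left open for the AI to complete
    return "\n".join(lines)

# The second pair is a made-up extra example in the same tone.
examples = [
    ("Hi", "Hello, nice to meet you! How can I help you today?"),
    ("Good morning", "Good morning! What can I do for you today?"),
]

prompt = build_prompt(
    "Greet the customer in a friendly and professional tone.",
    examples,
    "Hey there",
)
print(prompt)
```

The AI sees two greetings in the tone you want and then an unfinished third one, which nudges it to continue in the same style.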

Fairness

Fairness in AI is the absence of “bias”, making sure that AI systems treat everyone equally, without discrimination or preference.

Examples:

A fair AI-powered loan approval system treats applicants from different socioeconomic backgrounds equally so that everyone has a fair chance at financial success.

A fair AI-driven college admissions tool would give equal consideration to students from all ethnicities and backgrounds, promoting inclusion.

Features

Features in Machine Learning are the characteristics of the data you are trying to analyse. Features are measurable properties. Think of features as the ingredients in your dish.

Feature Selection

Selecting the right features (Feature Selection) and discarding the unnecessary ones is important when it comes to making accurate predictions with the model. For example, if you are trying to understand whether it will rain tomorrow, and you exclude data on humidity, then your model will not be accurate. Similarly, if you try to use data on how many people were cooking noodles that day, it will not be relevant.

Examples:

In a machine learning model that predicts house prices, features could include the number of bedrooms, area of the plot, and neighbourhood.

For an AI system that recognises emotions in facial expressions, features can include the curvature of the mouth and the shape of the eyes.

In a spam email detection model, features could be specific keywords, sender's email address, and number of recipients.
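Going back to the rain example, here is a small sketch of one possible way to pick features: measure how strongly each feature moves together with the outcome (correlation) and keep only the ones above a cut-off. The numbers are made up, the 0.5 threshold is an arbitrary choice for illustration, and real projects use many other selection techniques.

```python
from math import sqrt

def correlation(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Made-up observations for six days (1 = it rained the next day, 0 = it did not).
rained_next_day = [0, 0, 1, 1, 0, 1]
features = {
    "humidity_percent": [30, 40, 85, 90, 35, 80],
    "people_cooking_noodles": [5, 7, 7, 5, 6, 6],
}

THRESHOLD = 0.5  # arbitrary cut-off chosen for this illustration
selected = [
    name for name, values in features.items()
    if abs(correlation(values, rained_next_day)) >= THRESHOLD
]
print(selected)
```

In this toy data, humidity rises and falls with rain while the noodle count does not, so only humidity survives the cut.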

Feedback Loops

Let’s imagine that you are building a recommendation engine for YouTube. You have just trained, tested, and launched the model. As it rolls out, you notice an increase in engagement with recommended videos. You cannot stop here! You need to know how well the model is performing with different audience segments and content types.

The data you receive from testing the model with users is “feedback”. If you feed this back to the model (which recommended videos did well and which ones did not), it can train on that data to improve further.

Acting as constructive criticism, feedback helps AI systems become more accurate and effective over time.

Another example: A user rating system for AI, such as an upvote or downvote to the responses in intelligent chatbots, provides feedback that helps improve the quality and relevance of the generated text.

Fine-tuning

Fine-tuning refers to refining an existing AI model by giving it new data and parameters to train on. It helps AI systems adapt to new tasks or domains.

Let’s say you have a model that can identify different types of trees based on their images. Now, you want to use this model to identify trees in Thailand. Thailand will have a certain ecosystem and a variety of trees from the same species. To improve the performance of the model so that it identifies most trees correctly in Thailand, you will give it more data (fine-tuning) with images of trees in Thailand.

Examples:

Fine-tuning a pre-trained general image recognition system on a new dataset of medical images can help it identify diseases and abnormalities more accurately.

Fine-tuning an AI-powered recommendation system with new examples from user feedback can help it generate more relevant suggestions.

Generative AI

Generative AI is the creative genius of the artificial intelligence world, capable of dreaming up new and original content. Generative AI today has been trained on existing data in the form of images, text, music, and other media types.

Examples:

OpenAI's GPT-3 is a generative AI that can create human-like text, write poetry, and even generate code.

Midjourney is a generative AI that can create hyperrealistic or fantastical images of people, animals, or objects.

OpenAI's MuseNet can compose original musical melodies and harmonies.

GPT (Generative Pre-trained Transformer)

GPT is a language model developed by OpenAI. It's designed to understand our natural language and generate human-like text (GPT-4 is multimodal and is not limited to text) based on what we ask it to do.

Generative = Generative AI

Pre-trained = Model has already been trained on a large amount of data

Transformer = A type of neural network

Examples:

GPT can be used to create a chatbot that helps users troubleshoot issues with their gadgets or answer questions about their favourite TV shows.

Content creators can utilise GPT to help brainstorm ideas for tweets.

Hallucination

When your AI model hallucinates, it dreams up inaccurate or irrelevant outputs. Think of hallucinations as glitches in the AI's thought process. They often highlight the limitations and imperfections in these models.

Examples:

A text-generating AI that produces a bizarre and nonsensical story when given a simple writing prompt, like a fever dream of words and sentences.

An image-generating AI that creates a picture of a six-legged dog or a two-headed bird, confusing reality with fantasy.

A text-generating AI telling you that the Coronavirus pandemic happened in 2017.

Instructions (LLM)

Instructions in the context of Large Language Models (LLMs) such as GPT-3 are the specific tasks, prompts, or queries given to the AI model, helping it understand what's expected and generate appropriate responses.

Examples:

Giving step-by-step instructions to an AI assistant, such as asking it to find the best restaurants in a specific city and sort them by user ratings.

Asking an AI model to create a workout routine based on your requirements.

Intent and Entities

"Intent" and "entities" are terms often used to describe how machines understand human language. Intent refers to the purpose or goal behind a person's words, and it is often an action that the machine has to take. Entities are the specific details that help clarify the intent.

Examples:

When you ask Siri, "Find me a coffee shop nearby," the intent is "find a coffee shop" while the entity is "nearby."

In a chatbot conversation, when someone types, "I want to order a large pepperoni pizza," the intent is "order pizza," and the entities are "large" and "pepperoni." The entities describe the pizza.

If you ask Google Assistant to "Play the latest episode of Last Week Tonight," the intent is "play episode," and the entity is "Last Week Tonight."
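The pizza example can be sketched with a toy keyword lookup. This is only an illustration under simple assumptions: the vocabularies below are hypothetical, and real assistants use trained language models rather than word lists.

```python
# Hypothetical vocabulary for a pizza-ordering bot.
INTENT_KEYWORDS = {"order pizza": ["order", "pizza"]}
ENTITY_VALUES = {
    "size": ["small", "medium", "large"],
    "topping": ["pepperoni", "mushroom", "cheese"],
}

def parse(message):
    """Return (intent, entities) found in the message by simple word lookup."""
    words = message.lower().replace(",", " ").replace(".", " ").split()

    # The intent matches when all of its keywords appear in the message.
    intent = None
    for name, keywords in INTENT_KEYWORDS.items():
        if all(keyword in words for keyword in keywords):
            intent = name

    # Entities are the known detail words that appear in the message.
    entities = {}
    for kind, values in ENTITY_VALUES.items():
        for value in values:
            if value in words:
                entities[kind] = value
    return intent, entities

print(parse("I want to order a large pepperoni pizza"))
# → ('order pizza', {'size': 'large', 'topping': 'pepperoni'})
```

The intent tells the bot what to do, and the entities fill in the details of the order.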

Internet of Things (IoT)

Imagine a world where your fridge can gossip with your toaster about how you let the bread go stale every week, and your doorbell's best friend is your electric bulb because it always tells the bulb when to turn on before someone enters the house – that's the Internet of Things (IoT) for you!

IoT is a network of interconnected devices that can communicate with each other and share data: a big, happy family of gadgets making our lives more convenient.

Examples:

A fitness tracker like Fitbit counts your steps, monitors your heart rate, and shares the data with your smartphone to help you reach your fitness goals.

In agriculture, IoT sensors can monitor soil moisture, helping farmers optimise irrigation and conserve water by automatically turning watering systems on and off accordingly.

Knowledge

In the realm of artificial intelligence (AI) and machine learning (ML), "knowledge" refers to the information a system has learned or has been trained on.

Examples:

IBM's Watson AI is trained on a vast array of topics, allowing it to answer questions on anything from history to pop culture.

In medical research, AI systems are trained on vast amounts of data from scientific articles to help discover potential new treatments for diseases.

Labels

Labels in AI/ML models are like the tags we put on our luggage when we travel – they help machines know what's what. For example, in image recognition, labels will be "cat," "dog," or "elephant" for pictures of animals, so the AI can learn to tell them apart. We can finally tell the difference between a corgi and a loaf of bread!

More examples:

For spam filtering, emails will be labeled as "spam" or "not spam" to train an AI system to identify and filter out unwanted messages.

In sentiment analysis, text data can be labeled as "positive," "negative," or "neutral" to help an AI system understand the emotions behind the words.

Large Language Model (LLM)

A large language model is like that friend who can talk about anything – it's an AI model designed to understand and generate human-like text. LLMs are trained on vast amounts of data, such as books, articles, and the internet, to learn grammar, style, and even some facts.

Example: ChatGPT can draft emails or generate responses in a chatbot, making it feel like you're talking to a real person. It can write paragraphs on a variety of subjects, from explaining scientific concepts to creating fictional stories.

Machine Learning (ML)

Machine learning helps machines learn from experience, without being given specific instructions, by analysing data and identifying patterns. It's like magic, but with math!

Examples:

Netflix uses ML to analyse your viewing habits and recommend shows and movies you might like, turning your Friday night into a binge-watching marathon.

Google Maps uses ML to analyse traffic patterns and suggest the quickest route to your destination, so you can avoid that massive traffic jam (except in Bengaluru, no AI can save us there).

Model Selection

There are a variety of models one can use but not all models fit all problem statements. Based on the performance of different models, the one that fits best is chosen. The process by which this model is chosen is called model selection. It's all about finding the right balance between complexity and accuracy.

Examples:

A data scientist working on a movie recommendation system might compare different ML models to find the one that best predicts user preferences.

In the medical field, researchers might use model selection to choose the most accurate AI model for diagnosing diseases from medical images.

Financial analysts could employ model selection to find the best AI model for predicting stock market trends, maximising returns, and minimising risks.
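Here is a minimal sketch of model selection for the movie recommendation case. It assumes two deliberately simple, hypothetical candidate models and a tiny made-up validation set, and it scores each one with the average size of its mistakes (mean absolute error). The candidate with the smallest error wins.

```python
def mean_absolute_error(model, data):
    """Average gap between the model's predicted rating and the real rating."""
    return sum(abs(model(x) - y) for x, y in data) / len(data)

# Made-up validation data: (user's average rating for the genre, actual rating).
validation = [(4.5, 5), (2.0, 2), (3.5, 4), (1.5, 1)]

# Two hypothetical candidate models.
candidates = {
    "always_three_stars": lambda genre_average: 3.0,
    "genre_average": lambda genre_average: genre_average,
}

errors = {
    name: mean_absolute_error(model, validation)
    for name, model in candidates.items()
}
best = min(errors, key=errors.get)
print(errors)  # → {'always_three_stars': 1.5, 'genre_average': 0.375}
print(best)    # → genre_average
```

In practice the candidates would be trained models and the validation set would be much larger, but the comparison step looks much the same.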

Natural Language

Natural language is the way humans talk to each other, full of slang, idioms, and that weird thing we do when we say something but mean something else.

In AI and ML, natural language refers to human languages like English, Spanish, or Mandarin, as opposed to programming or coding languages. It's the language of love, laughter, and occasionally, frustration with autocorrectttt.

Example: Voice assistants like Google Assistant or Alexa understand our commands in our natural language and respond in a conversational manner.

Natural Language Generation (or "NLG")

Natural Language Generation, or NLG for short, is the process of generating human-like text or speech.

Examples:

News organisations use NLG to automatically generate articles about financial reports or sports scores, turning dry data into engaging narratives.

Customer service chatbots use NLG to create human-like responses to customer inquiries, making interactions feel more personal and less robotic.

Natural Language Processing

Natural Language Processing helps machines understand, interpret, and respond to human language. NLP combines linguistics, computer science, and artificial intelligence to create systems that can read and understand text or speech.

When you ask Siri to set an alarm for 8 AM tomorrow, Siri uses NLP to understand that you want to set an alarm with properties: date = tomorrow, time = 8 AM, frequency = once. NLP is not just about identification of these properties and commands, but also context and how the sentence is said.

Examples:

Sentiment analysis tools use NLP to determine whether a piece of text, like a product review or a tweet, has a positive, negative, or neutral tone.

AI-powered translators, like Google Translate, use NLP to convert text from one language to another, breaking down language barriers one sentence at a time.

Neural Network

Neural networks (also known as artificial neural networks (ANNs)) are a computer's attempt to replicate the human brain, minus the irrational fears and weird dreams (so far).

Neural networks consist of interconnected nodes or "neurons" that process information and learn from data, allowing the machines to recognise patterns, make decisions, and even "think" in a way that mimics human intelligence. The algorithm learns from experience to adjust its parameters (how much each node matters) and one can experiment with the optimal number of nodes and layers to get the most accurate results.

Examples:

Image recognition systems use neural networks to identify objects in photos.

Self-driving cars rely on neural networks to process sensor data, make decisions, and navigate complex environments without human intervention.
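To show what "nodes" and "layers" mean, here is a minimal sketch of a tiny network with two hidden neurons and one output neuron. All weights are hand-picked, hypothetical numbers. In a real network, training would adjust them automatically, and there would be far more neurons.

```python
from math import exp

def sigmoid(x):
    """Squash any number into the range 0 to 1."""
    return 1 / (1 + exp(-x))

def neuron(inputs, weights, bias):
    """Weigh each input, add them up with a bias, and squash the result."""
    total = sum(i * w for i, w in zip(inputs, weights)) + bias
    return sigmoid(total)

def tiny_network(inputs):
    """Two hidden neurons feed one output neuron (weights are made up)."""
    hidden = [
        neuron(inputs, [0.5, -0.2], 0.1),
        neuron(inputs, [-0.3, 0.8], 0.0),
    ]
    return neuron(hidden, [1.0, -1.0], 0.0)

output = tiny_network([1.0, 2.0])
print(output)  # a number between 0 and 1
```

Each weight says how much one connection matters. Learning means nudging those weights until the output matches the examples the network is shown.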

Optimisation

Optimisation is the process of fine-tuning, tweaking, or adjusting an AI or ML model to make it as accurate and efficient as possible.

Examples:

In the transportation industry, optimisation can help create more efficient routes for delivery vehicles — saving time, fuel, and reducing environmental impact.

Social media platforms use optimisation to improve their content ranking algorithms.

Parameters

Let’s imagine that you are creating an engagement score for your social media app. Engagement is measured by how many likes, comments, and shares people give on a post. However, shares are a higher form of engagement than the other two because sharing means you liked it so much that you want your friends to see it.

If my model is: Engagement score = 1 * likes + 1 * comments + 2 * shares,

Likes, comments, and shares are my attributes and 1,1,2 are my parameters (or weights assigned to each attribute).

Parameters are the variables your model will optimise, and they can be adjusted to improve its performance.
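The engagement score above can be written as a few lines of code. The post numbers are made up, and the function name `engagement_score` is just for illustration.

```python
# Parameters (weights) from the model above: likes 1, comments 1, shares 2.
weights = {"likes": 1, "comments": 1, "shares": 2}

def engagement_score(post, weights):
    """Multiply each attribute by its weight and add the results."""
    return sum(weights[name] * post[name] for name in weights)

# A made-up post with 10 likes, 3 comments, and 4 shares.
post = {"likes": 10, "comments": 3, "shares": 4}
print(engagement_score(post, weights))  # → 21

# Adjusting a parameter changes the score for the same post.
heavier_shares = {"likes": 1, "comments": 1, "shares": 3}
print(engagement_score(post, heavier_shares))  # → 25
```

The attributes (likes, comments, shares) come from the data, while the weights are what gets tuned.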

Prompt

Prompts are questions, statements, or instructions given to an AI system to get a specific response or action in return. If AI is your genie, then prompt is how you ask for a wish! Prompts are an important part of how we interact and communicate with AI systems.

Examples:

When you ask Siri, "What's the weather like today?" you're giving it a prompt, and Siri responds by providing you with the current weather conditions.

If you ask a chatbot on a shopping website, "Can you help me find the best deals on running shoes?", the chatbot uses this prompt to search for relevant products.

Prompt Engineering

Prompt Engineering is the art of crafting precise and effective prompts to generate relevant and useful responses. If you ask your genie for a wish but you are not clear enough, you might end up with a bow on your head instead of a bow and arrow.

Question Answering (QA)

Question Answering, or QA, is your very own personal trivia champion – a system designed to answer questions in a natural, human-like manner. QA systems can read and understand text, process user queries, and respond with relevant answers, making them incredibly useful in fields like customer support, education, and research.

Examples:

AI-powered customer service chatbots use QA systems to quickly provide answers to common questions, like shipping times or return policies.

In educational settings, QA systems can help students find answers to their questions, making learning more efficient and engaging.

Researchers can use QA systems to search through large databases of scientific articles.

Sentence: I've been using a Question Answering system to help me study for my history exam, and it's like having a knowledgeable tutor available 24/7 to answer all my questions.

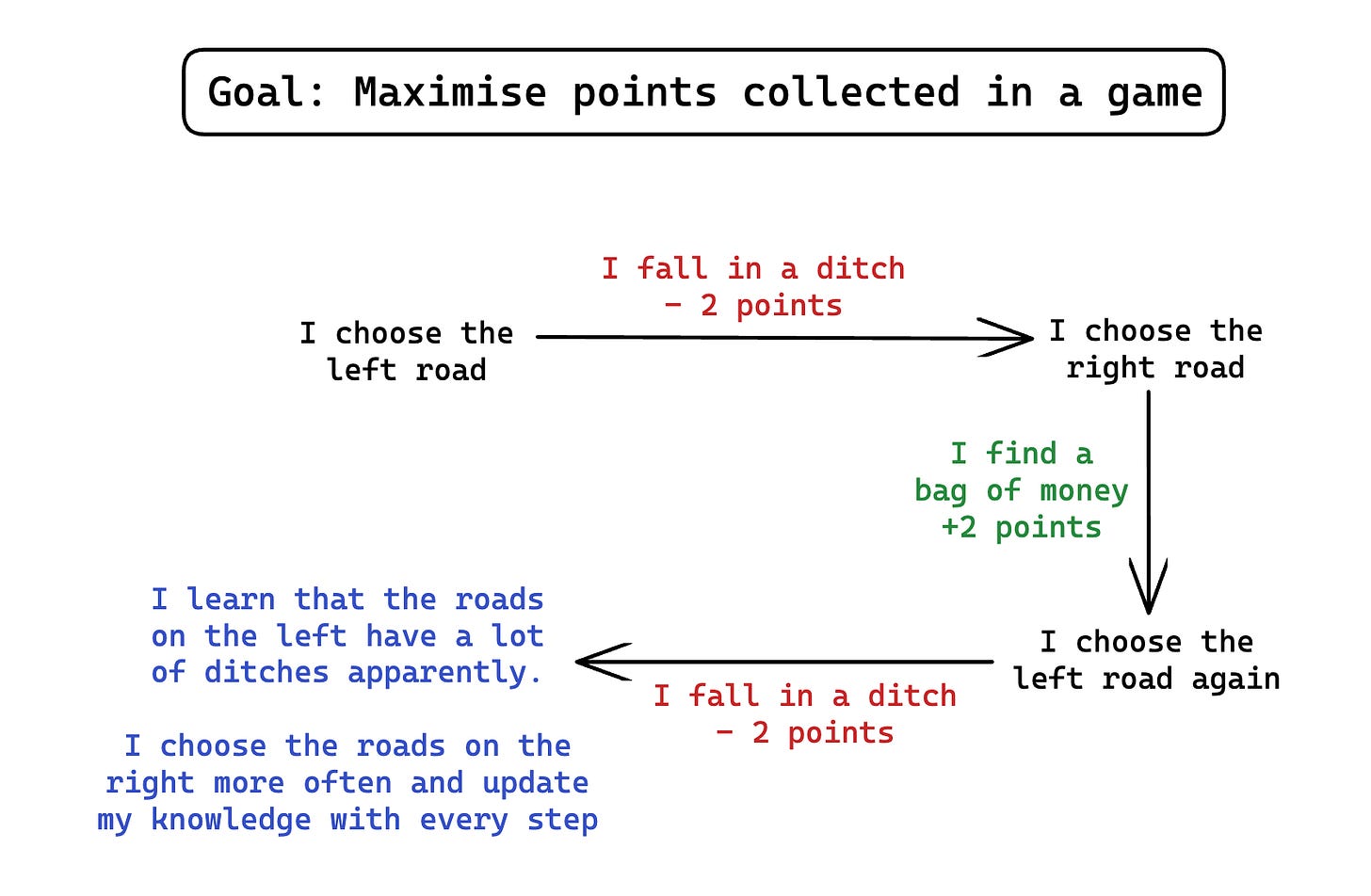

Reinforcement Learning

Imagine teaching a child how to ride a bike. You cheer them on when they pedal in the right way and gently correct them when they wobble. This is reinforcement learning in the real world!

With reinforcement learning, machines learn from their mistakes. Using trial and error, and receiving positive or negative feedback for their actions, they figure out the best strategies and behaviours to complete a task or solve a problem.

In very simple terms: A positive feedback could be a positive score and a negative feedback could be a negative score. The machine will have to optimise for the highest positive score. See the image below for a simple example.

Examples:

An AI-powered investment system could use reinforcement learning to experiment with different trading strategies, finding the most profitable approach over time.

In robotics, reinforcement learning can help robots learn how to walk, balance, and interact with their environment by trying different actions and learning from their mistakes.
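Here is a minimal sketch of the score-based idea, loosely based on the bike example. It assumes just two hypothetical actions with fixed rewards and uses a simple strategy often called epsilon-greedy: mostly repeat the action with the best average score so far, but occasionally try a random one. Real reinforcement learning problems have many more actions, changing situations, and noisy rewards.

```python
import random

# Hypothetical rewards: +1 for a good action, -1 for a bad one.
REWARDS = {"pedal_steadily": 1, "wobble": -1}

def train(steps, epsilon, rng):
    """Learn the average reward of each action by trial and error."""
    actions = list(REWARDS)
    totals = {action: 0 for action in actions}
    counts = {action: 0 for action in actions}
    for _ in range(steps):
        untried = [a for a in actions if counts[a] == 0]
        if untried:
            action = untried[0]  # try every action at least once
        elif rng.random() < epsilon:
            action = rng.choice(actions)  # explore: pick at random
        else:
            # exploit: pick the action with the best average so far
            action = max(actions, key=lambda a: totals[a] / counts[a])
        totals[action] += REWARDS[action]
        counts[action] += 1
    averages = {a: totals[a] / counts[a] for a in actions}
    return averages, counts

averages, counts = train(steps=200, epsilon=0.1, rng=random.Random(42))
best_action = max(averages, key=averages.get)
print(best_action, averages)
```

After a few tries the machine has a positive average for pedalling steadily and a negative one for wobbling, so it settles on the action with the highest score.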

Reinforcement Learning from Human Feedback (RLHF)

Remember Reinforcement Learning? At a high level, think of RLHF as using that concept but with the help of humans who judge whether the model did well or not.

Say you are teaching a student how to write a poem. Whenever you give them a topic, they write the poem and come back to you for feedback. You rate the poems and give them points. They learn based on your grading, identifying which techniques fetch them the highest points!

In RLHF, humans check and give feedback on the output of the model and the model then learns what works and what doesn’t, improving over time.

Example: ChatGPT was trained using RLHF.

Response

Response is the AI’s output to your command, question, or message. Responses can be multi-modal (text, speech, or even actions).

Examples:

When you ask Siri for the weather, the “response” is a spoken forecast that tells you what to expect that day.

An AI-powered travel planning app could respond to your request for hotel suggestions with a list of options, complete with prices and ratings based on your requirements.

Robotics

Robotics is the branch of science and engineering that deals with creating robots. These robots can be physical machines or virtual tools using AI / ML to learn and perform tasks.

Examples:

In manufacturing, robotics is used to automate assembly lines, producing products more quickly and with fewer errors.

Vacuum cleaners, like the Roomba, use AI and robotics to keep your floors clean and dust-free without you having to lift a finger.

Speech Recognition

Speech recognition is the technology that allows machines to understand and convert spoken words into text. Learning the nuances of human speech, speech recognition makes it possible for us to talk to our gadgets and AIs.

Examples:

Alexa and Siri use speech recognition to understand your spoken commands and answer your questions.

Google Voice Typing allows you to dictate messages or notes, converting your speech into written text.

Speech Synthesis

Speech synthesis helps machines generate human-like speech. AI / ML systems use speech synthesis to convert text into spoken words, letting our devices talk back to us in a natural way.

Examples:

Text-to-speech apps such as Speechify use speech synthesis to read articles or books out loud.

GPS navigation systems such as Google Maps use speech synthesis to give you turn-by-turn directions.

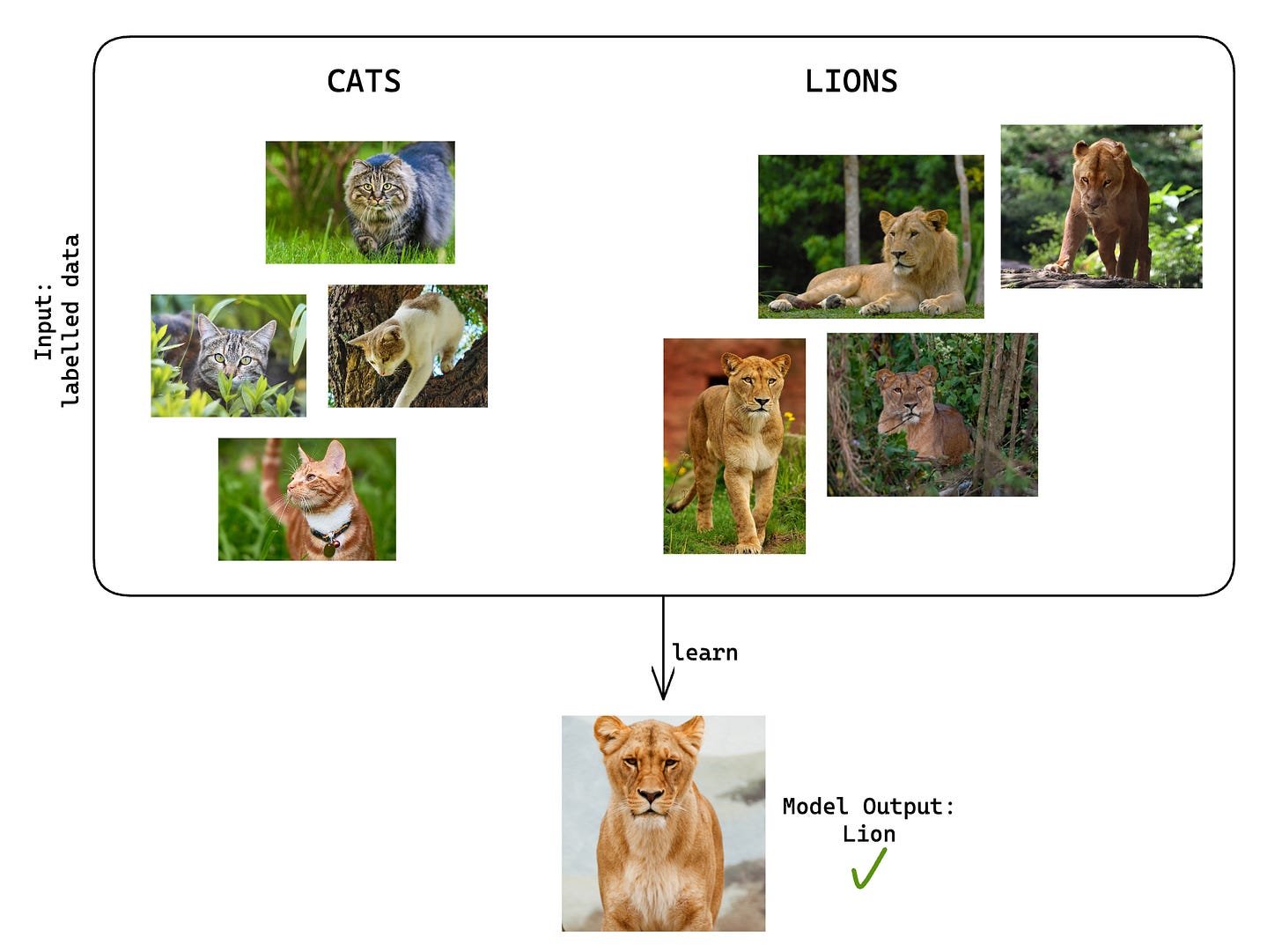

Supervised and Unsupervised Learning

Supervised learning is the approach in which a machine learns from labeled examples. We provide the model all the inputs and the correct output for each input combination, and let it find the relationship between those to make predictions on new, unseen data. See the image below for an example.

Applications and drawbacks: Supervised learning has a lot of real-world applications such as making a prediction or classifying things into categories. Since supervised learning needs a large amount of data to learn on, the labelling can get quite tedious. In the above example, imagine tagging 10,000 images manually.

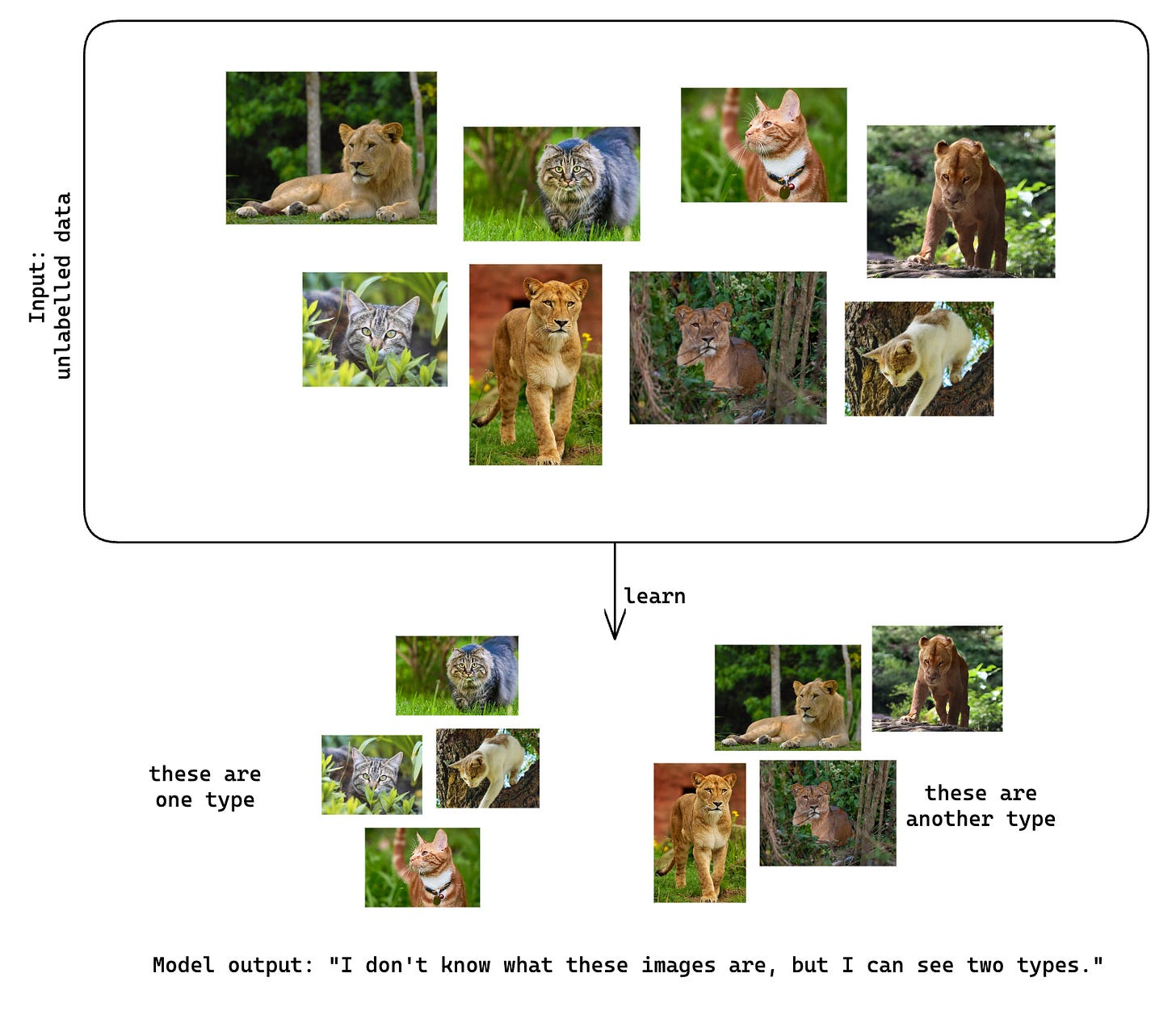

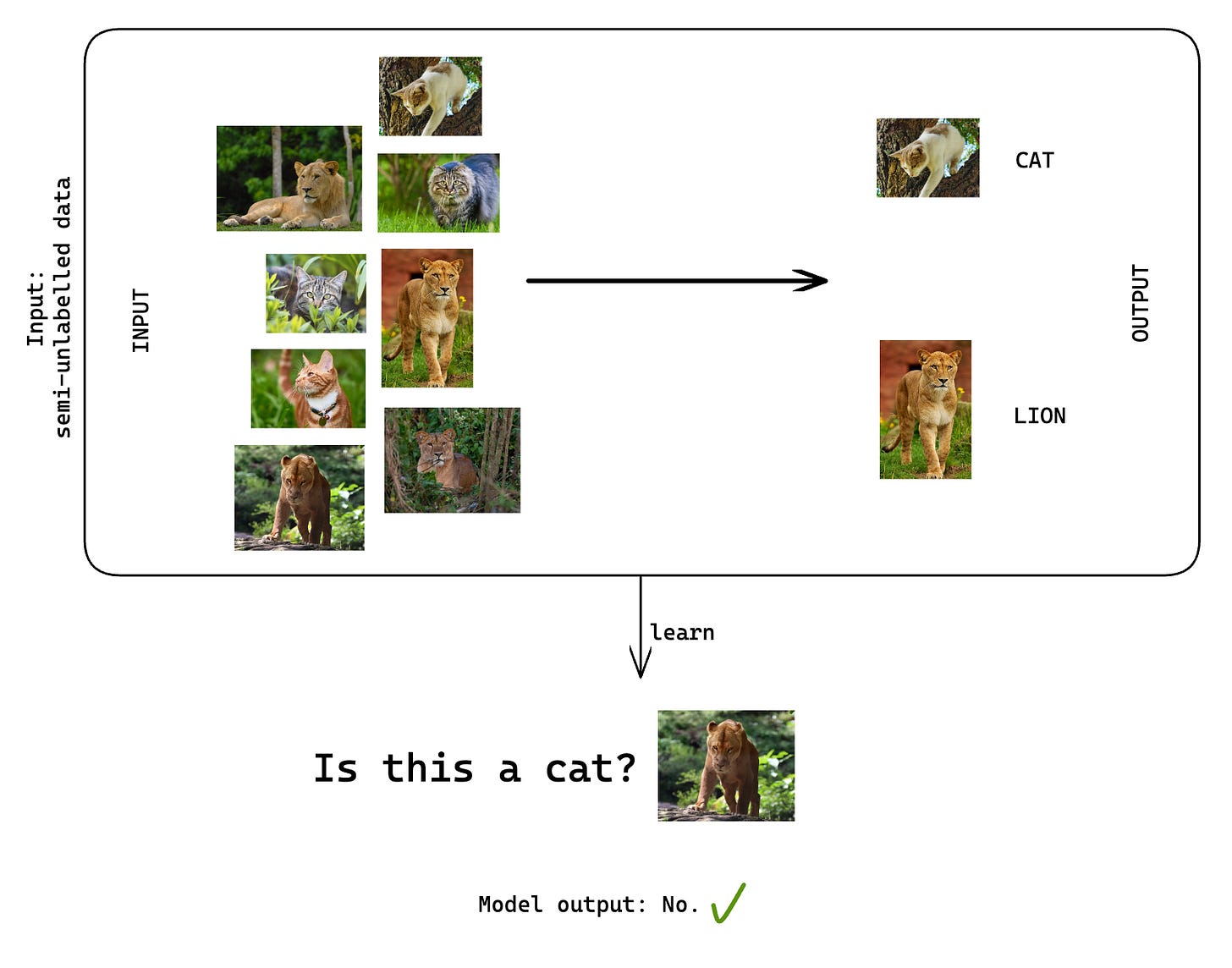

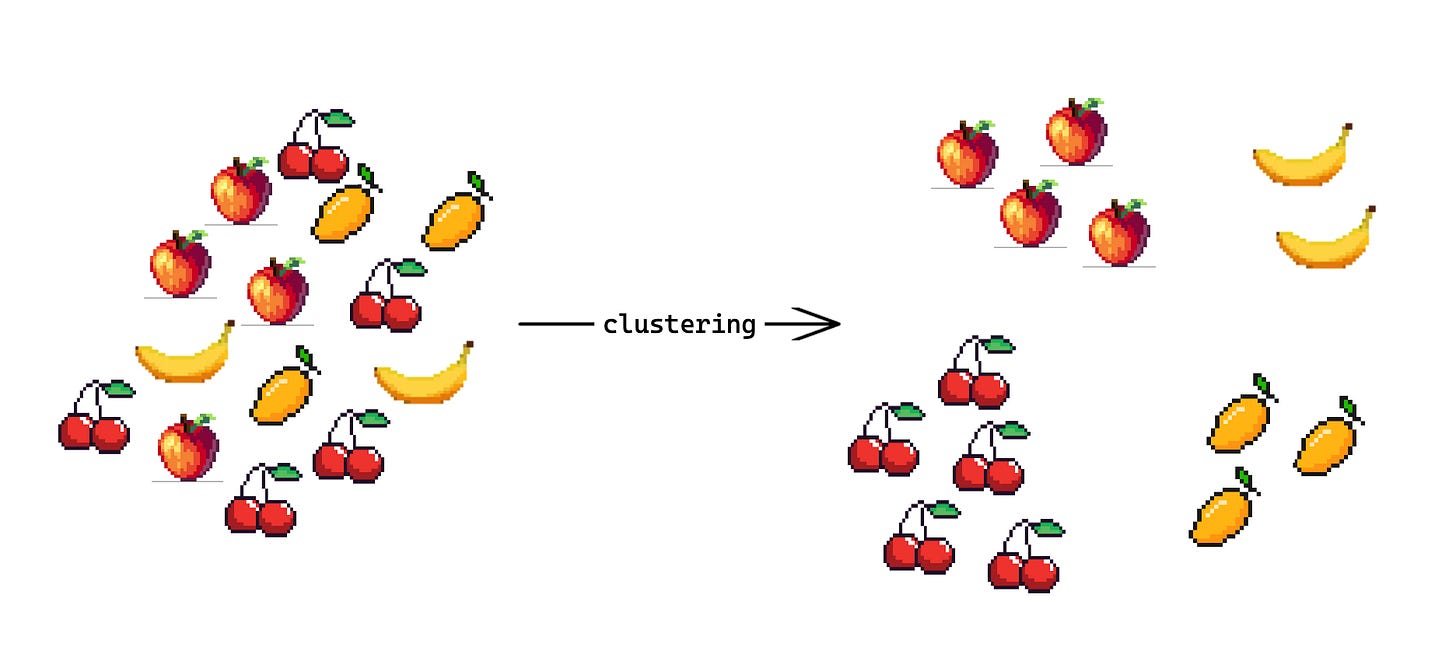

Unsupervised learning is the approach in which a machine learns from unlabeled examples, finding patterns on its own. See the image below for an example.

Applications and drawbacks: While there is a lot of unlabelled data to use, the applications of these methods are limited. Anomaly detection is one of the key applications of unsupervised learning, along with clustering (finding groups).

Examples:

In email spam filters, supervised learning can be used to teach AI systems which messages are spam and which are legitimate, based on labeled examples of both types of emails.

Social media platforms use unsupervised learning to identify and group similar content for users.
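Here is a minimal Python sketch of the supervised idea: learn from labeled examples, then predict a label for something new. The fruit weights are invented, and the "copy the label of the closest example" rule (known as nearest neighbour) is just one simple technique picked for illustration.

```python
# Labeled examples: (weight in grams, label). The numbers are made up.
training_data = [
    (150, "apple"),
    (170, "apple"),
    (160, "apple"),
    (110, "lemon"),
    (100, "lemon"),
    (120, "lemon"),
]


def predict(weight, examples):
    """Return the label of the labeled example closest to the new input."""
    nearest = min(examples, key=lambda example: abs(example[0] - weight))
    return nearest[1]


prediction_heavy = predict(165, training_data)
prediction_light = predict(105, training_data)
print(prediction_heavy, prediction_light)
```

Without the labels in `training_data`, this approach would have nothing to copy from, which is exactly why labelling matters so much in supervised learning.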

Semi-Supervised Learning

As the name suggests (unlike machines, humans are quite intuitive), it is a combination of the two techniques above. The image below explains it best:

Self-supervised learning

A fairly advanced topic to cover in a glossary, self-supervised learning takes us one step closer to making machines “learn” like humans do.

Imagine that you are visiting a country where only language X is spoken and you do not know X. You do not have a translator or a phone. The first few days will be difficult, but soon you will start seeing patterns. People will always greet you with a certain word or phrases. When you go to the grocery store, they will ask you for change using certain words, and so on. Slowly, after talking in signs for a bit, you will start catching the words and making sense of them — at least enough to get by in the day to day.

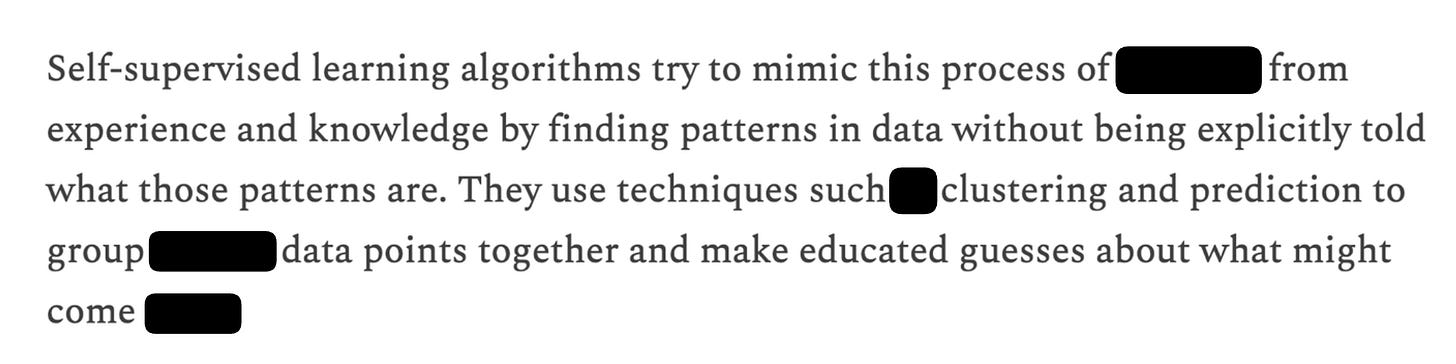

Before reading further, see if you can fill in the blanks in the following image:

How did you know that “as” should follow “such” when talking about examples? You have seen these patterns and usage over and over again so you know how it works. Here it is:

Self-supervised learning algorithms try to mimic this process of learning from experience and knowledge by finding patterns in data without being explicitly told what those patterns are. They use techniques such as clustering and prediction to group similar data points together and make educated guesses about what might come next.

Example:

GPT-3 is trained using self-supervised learning. Based on a very large dataset including articles, books, webpages, and other sources, it learns to predict which word is most likely to come next.
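To make "predict the next word" concrete, here is a toy Python sketch. It simply counts which word tends to follow which in a tiny made-up text. This is not how GPT-3 works internally; it only shows how the text itself can supply the "correct answers" without anyone labelling anything.

```python
from collections import Counter, defaultdict

# Unlabeled text; the "labels" come from the text itself (the next word).
corpus = (
    "fruits such as apples are sweet . "
    "pets such as dogs are loyal . "
    "cities such as paris are busy ."
)


def build_next_word_counts(text):
    """Count which word follows each word in the text."""
    words = text.split()
    counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts


def predict_next(counts, word):
    """Return the word seen most often after `word`, or None if unseen."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]


next_word_counts = build_next_word_counts(corpus)
print(predict_next(next_word_counts, "such"))
```

Because "such" is always followed by "as" in the sample text, that is the guess the sketch makes.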

Text-to-Speech

Exactly as expected, Text-to-Speech or TTS is a technology that converts text into spoken words and sentences.

Examples:

Audiobooks generated with the help of TTS models.

Your phone’s in-built text-to-speech that you can use when you turn on accessibility features.

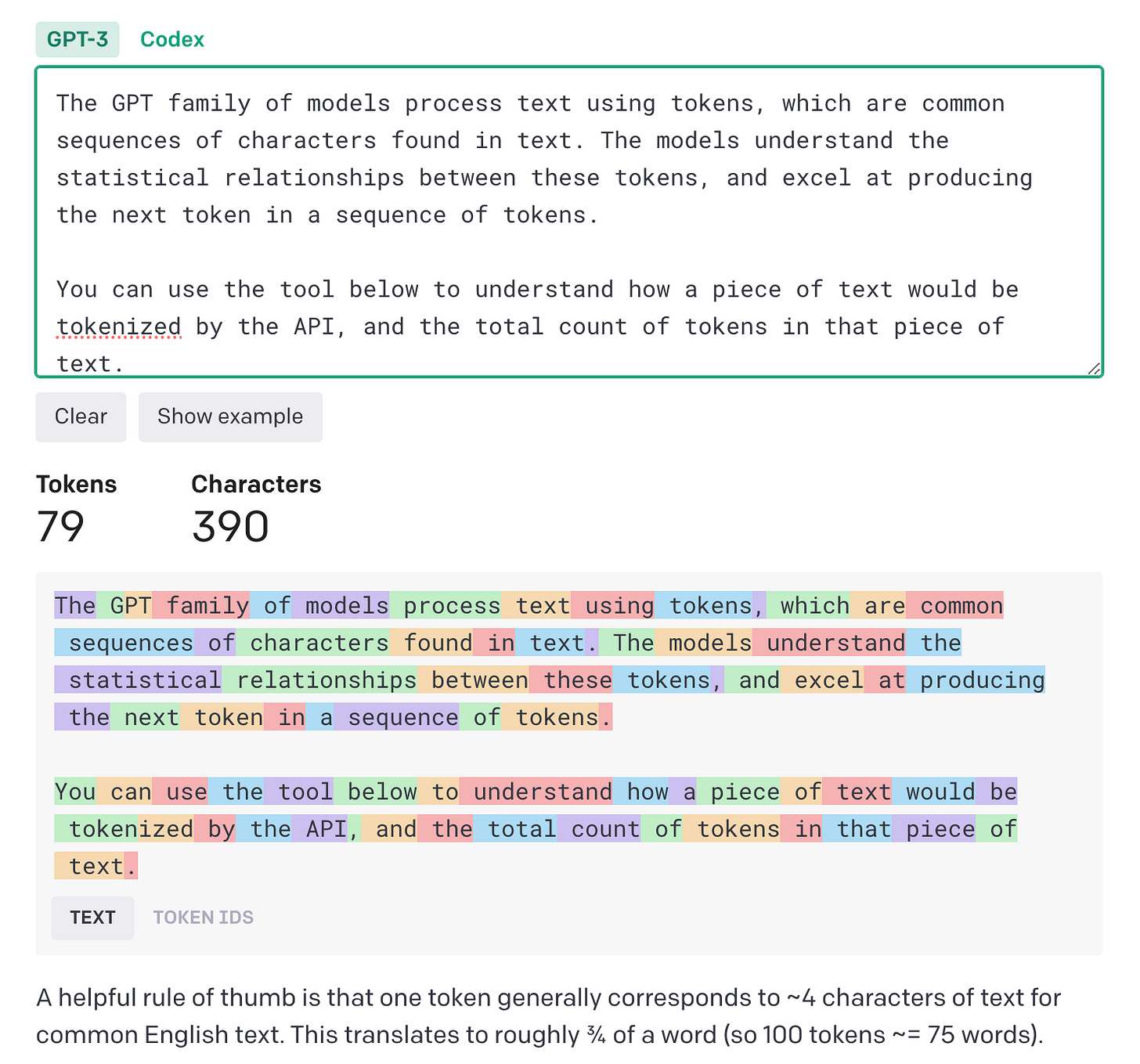

Tokenisation

Remember solving a puzzle like the one in the image below? The big picture of the puzzle would be broken down into several smaller pieces that fit in together to create the whole. Let’s call each small piece a “token”.

Tokenisation in the context of text is similar. It is the process of splitting a sentence, paragraph, document, or other forms of text into smaller units called “tokens”. Depending on how the tokenisation is done, tokens could be individual characters, syllables, words, or something else.

Examples:

Search engines use tokenisation to break down your search query into tokens and then match them to relevant content.

When using OpenAI's GPT model to generate text, the number of tokens in the input and output can affect the processing time and the cost of using the service.
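A quick Python sketch shows two simple ways of cutting text into tokens. The function names are invented for this example, and models such as GPT use learned sub-word tokenisers that this sketch does not try to reproduce.

```python
import re


def word_tokens(text):
    """Split text into words and punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


def character_tokens(text):
    """Split text into individual characters, ignoring spaces."""
    return [char for char in text if not char.isspace()]


sentence = "AI is fun!"
print(word_tokens(sentence))       # ['AI', 'is', 'fun', '!']
print(character_tokens(sentence))  # ['A', 'I', 'i', 's', 'f', 'u', 'n', '!']
```

The same sentence becomes 4 tokens or 8 tokens depending on the method, which is why token counts (and costs) depend on the tokeniser being used.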

Training (AI/ML)

If you have ever owned a pet, you understand “training” better than most. In the context of AI/ML models, training is the process of teaching a machine how to do a specific task by feeding it a ton of data.

Examples:

1. Spam filters in your email are trained to identify and separate spam from your important emails.

2. Spotify trains its recommendation engine to suggest music and podcasts that you'll likely enjoy.

Turing Test

The Turing Test is a test designed to determine if a machine can exhibit intelligent behaviour that is indistinguishable from that of a human. Imagine having a conversation with someone behind a curtain, and if you can't tell whether it's a person or a machine, then the machine passes the test.

The definition of the ultimate Turing Test may evolve. There are many discussions on whether and how we should update the Turing Test and when a machine will actually pass it. As you can guess, declaring that you think you are talking to a machine can become subjective very quickly if the test is not designed properly.

Movie recommendation: “Ex Machina” — one of the best films exploring the Turing Test and human-AI interactions.

Variance

Variance is the sensitivity of a model to small fluctuations in the training data.

Suppose you trained a model to identify different breeds of dogs. Now, if you trained the model in such a way that it gives the most accurate answer on all training images, then it may fumble when you give it a slightly different picture later as a test image. This is the case of high variance.

If you train the model in such a way that it understands different images well, then you will get a low variance. However, this would mean that it can have a high bias.

Example: A stock market prediction model with high variance may overfit the training data, leading to poor performance in predicting actual stock prices and potentially causing you to lose money.

Watch this great video on the bias-variance trade-off:

☀️ Applications

In this section, we will look at some common applications of AI in the real-world.

Anomaly Detection

Finding strange or unusual patterns in data that shouldn't be there.

In the banking industry, anomaly detection helps identify unusual transactions (such as a bank transfer from a new location or a larger than usual sum of money being spent), catching sneaky fraudsters before they empty your bank account.

In the manufacturing sector, it can monitor the production line and spot faults (such as a toy with four eyes instead of two) in products early.
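As a rough illustration, here is a Python sketch that flags a payment when it is far from what is usual for an account. The amounts, the threshold of 3 standard deviations, and the function name `is_anomaly` are assumptions for this example; real fraud systems look at many more signals.

```python
from statistics import mean, stdev

# Hypothetical past card payments, in the account's currency.
usual_amounts = [20, 25, 22, 19, 24, 21]


def is_anomaly(amount, history, threshold=3.0):
    """Flag an amount that sits more than `threshold` standard deviations from the usual."""
    average = mean(history)
    spread = stdev(history)  # assumes the history is not all one identical value
    z_score = abs(amount - average) / spread
    return z_score > threshold


print(is_anomaly(23, usual_amounts))   # False
print(is_anomaly(500, usual_amounts))  # True
```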

Autonomous Vehicles

Vehicles designed to drive themselves without a human driver, navigating roads, traffic rules, and obstacles. Think: self-driving cars.

In logistics, self-driving trucks can be used for long-haul deliveries without a truck driver having to spend days on the road.

In agriculture, autonomous tractors and harvesters can help farmers tend to their crops.

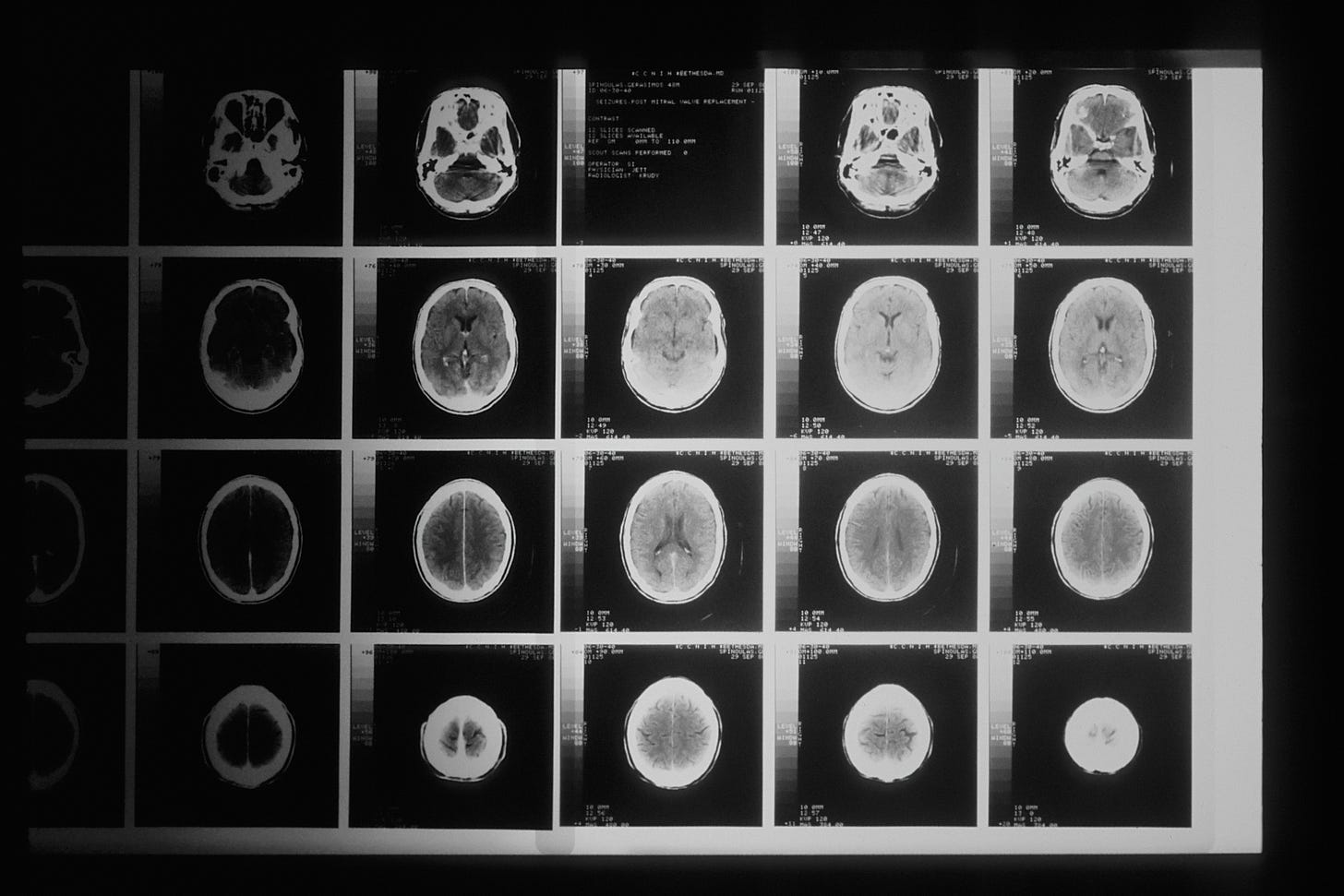

Computer-Assisted Diagnosis

Helps doctors diagnose diseases by analysing medical data and images, providing a second opinion that can save lives and prevent misdiagnoses.

In radiology, computer-assisted diagnosis helps doctors spot tiny tumours in medical images that even the most eagle-eyed professionals might miss.

In cardiology, it can analyse heart signals, identifying abnormalities to detect the beginning of a heart attack.

Drug Discovery

Finding and creating new drugs and medications by sifting through tons of data, making predictions, and suggesting potential compounds.

Example: In cancer research, AI-driven drug discovery can help scientists find new treatments in a shorter amount of time for a lower cost.

Emotion Recognition

Analysing facial expressions (by looking at your face), speech patterns (by hearing your voice), and even body language to figure out your emotional state. A mind-reading machine!

In customer service, emotion recognition can help AI voice assistants understand your frustration level and respond with more compassion.

In mental health, emotion recognition can help therapists and counselors track their clients' emotional well-being.

In the automotive industry, it can be used to monitor drivers' emotions, detecting drowsiness or stress and alerting them to ensure a safe ride.

Fraud Detection

Identifying suspicious patterns, transactions, or activities that could indicate fraud, protecting your hard-earned money.

In credit card transactions, fraud detection can alert you about unusual spending patterns.

In insurance claims, it can help spot fraudulent claims.

In online marketplaces, fraud detection can identify fake reviews.

Image Classification

Categorises and labels images based on their content, recognising objects, people, and even scenes.

Image classification in photo gallery apps helps organise and filter your photos.

In wildlife conservation, it can help identify and track endangered species caught on camera.

Image Recognition

Identifying specific objects, people, or places within an image.

Example: In security systems, image recognition can identify unauthorised individuals.

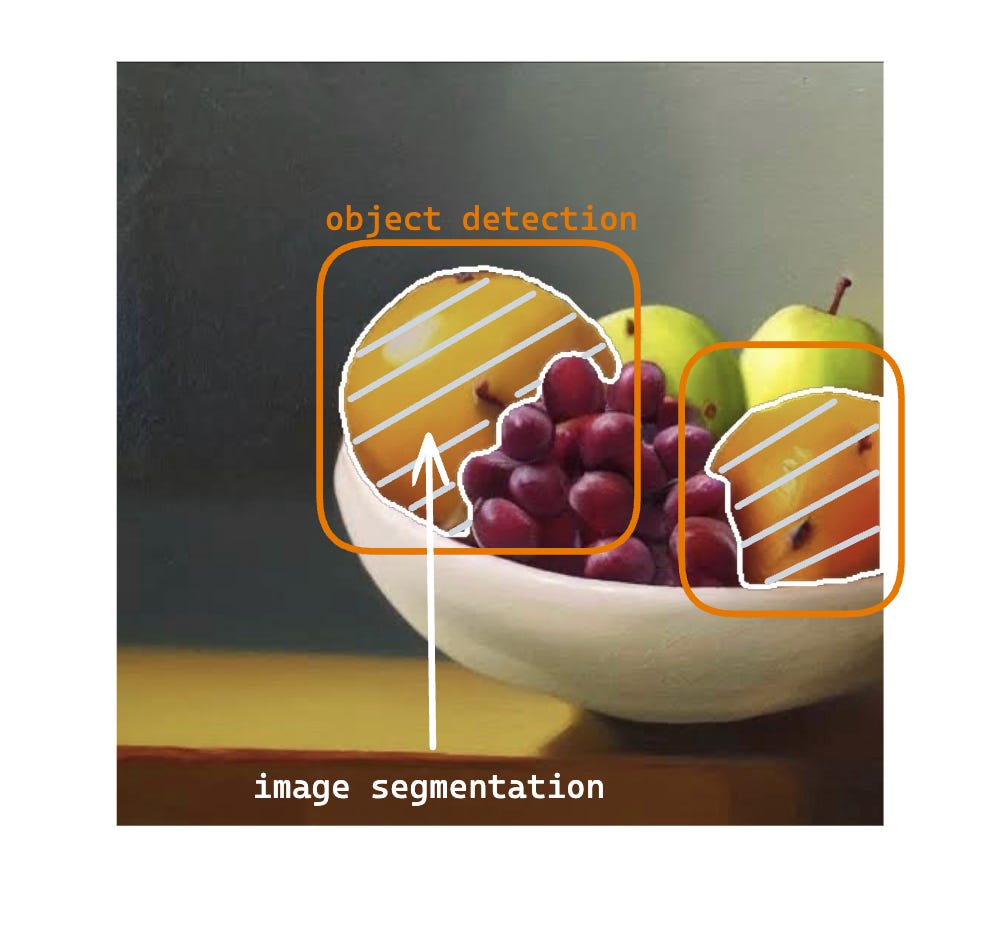

Image Segmentation

Separating objects, people, or areas within a picture, making it easier for computers to understand and process complex scenes. Think of it as digital scissors that can cut out the important bits from a photo.

Detailed medical images can be created by identifying different parts in a picture or a scan.

Self-driving cars can identify pedestrians, other vehicles, and traffic signs.

Intelligent Personal Assistants

Assistants understand and respond to voice commands, and can be trained to perform general tasks or more specific ones.

In smart homes, IPAs can control your lights, thermostat, and appliances.

In travel planning, IPAs can help you book flights, hotels, and rental cars.

In productivity, IPAs can manage your calendar, set reminders, and send messages and emails.

Medical Image Analysis

Extracting useful information from medical images such as an anomaly, development of a tumour, or change in the shape of an organ beyond usual limits.

In orthopaedics, it can analyse X-rays or bone scans, pointing out fractures.

In prenatal care, medical image analysis can assess ultrasound images to monitor the health and development of unborn babies.

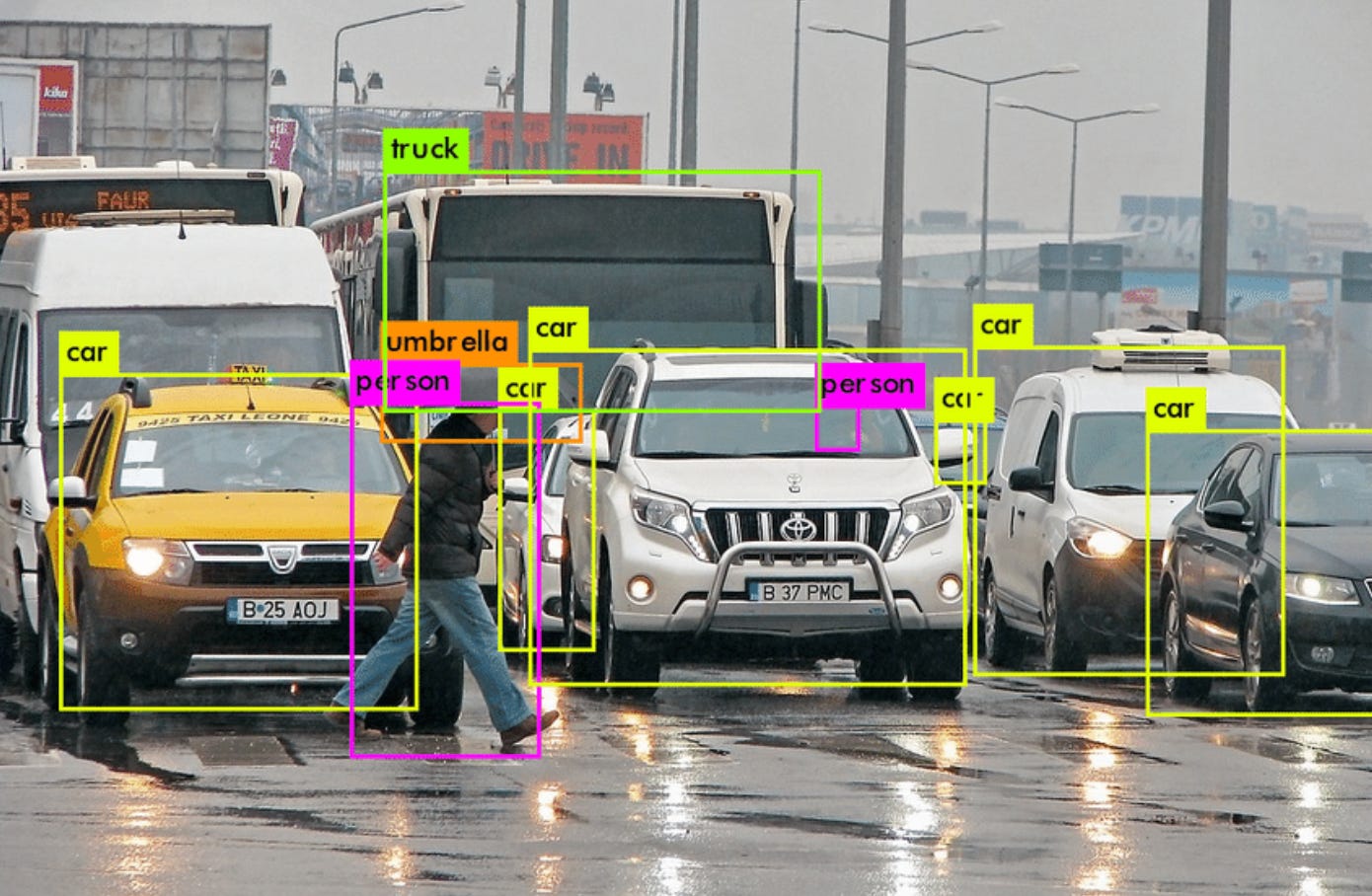

Object Detection

Locating and recognising different objects.

Wait, how is it different from Image Segmentation?

At this point, you must be torn between using “image segmentation” or “object detection” or something else. Image segmentation is an advanced form of object detection which separates the object in the image from the rest of the image. Take this visual example:

Recommendation Systems

Recommendation Systems analyse your behaviour, interests, and past choices, along with those of your friends, to offer personalised recommendations.

In online shopping, recommendation systems suggest products based on your browsing history.

In entertainment, they recommend movies, TV shows, or music tailored to your preferences.

Sentiment Analysis

Examining words and phrases, and determining whether they convey positive, negative, or neutral feelings.

In social media monitoring, it can track public opinion about a brand or topic, helping businesses react and adapt to the “mood” of the internet.

In political campaigns, sentiment analysis can assess voter opinions.
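The simplest possible version of this can be sketched in Python by counting positive and negative words. The word lists below are tiny and hand-made for this example; real sentiment analysis systems learn these signals from large amounts of data and handle things like sarcasm and negation far better.

```python
# Tiny hand-made word lists; real systems learn these signals from data.
POSITIVE_WORDS = {"love", "great", "happy", "excellent", "good"}
NEGATIVE_WORDS = {"hate", "terrible", "sad", "awful", "bad"}


def sentiment(text):
    """Label text as positive, negative, or neutral by counting known words."""
    words = [word.strip(".,!?").lower() for word in text.split()]
    score = sum(word in POSITIVE_WORDS for word in words)
    score -= sum(word in NEGATIVE_WORDS for word in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this brand, the service is great!"))  # positive
print(sentiment("Terrible update. I hate it."))               # negative
print(sentiment("The parcel arrived on Tuesday."))            # neutral
```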

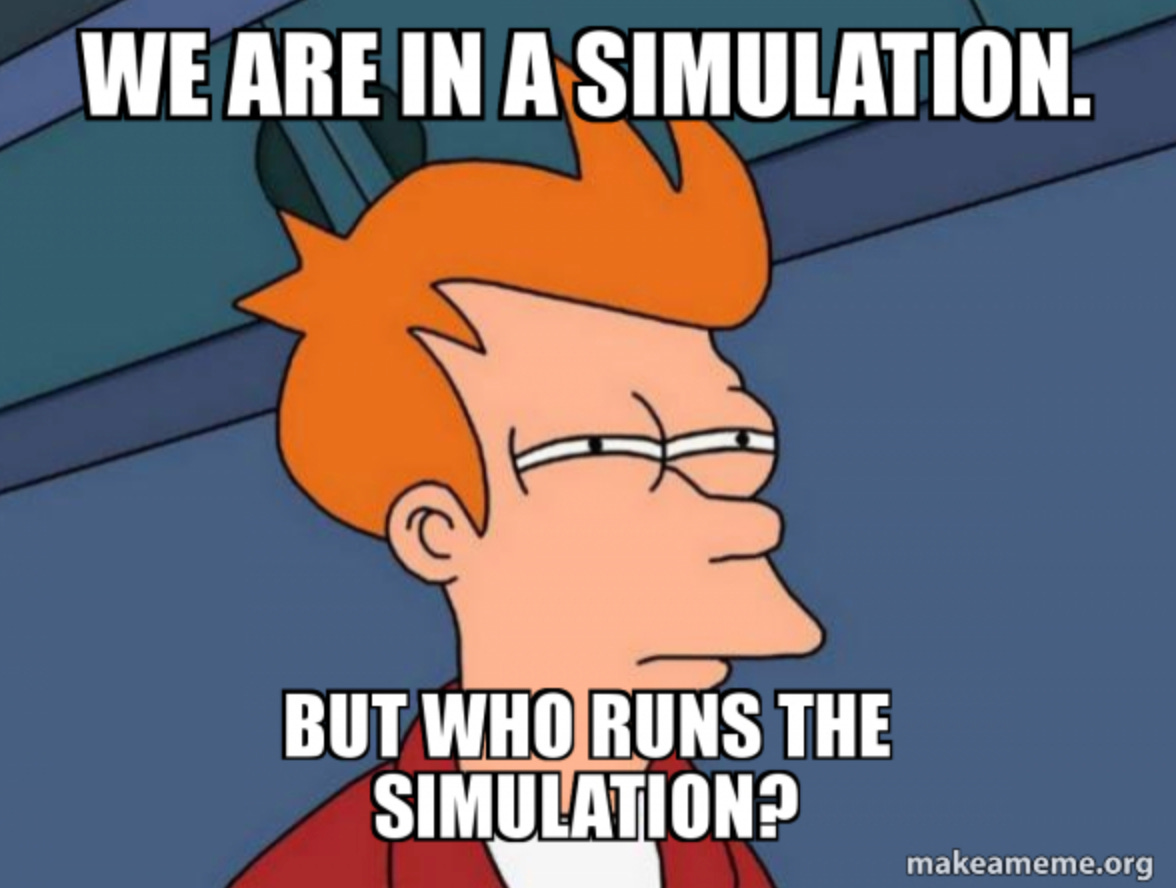

Simulation

Simulation is a digital sandbox where you can play around with different scenarios and test ideas without any real-world consequences. Virtual models of systems or processes can be used to predict outcomes, identify problems, and optimise performance.

Simulations help engineers test safety gear inside cars and plan cities based on traffic patterns and projections.

Smart Home Devices

Automated household appliances, lighting, and security systems that can take commands or work independently.

Smart lighting systems can be programmed to adjust brightness or turn on and off at specific times, making your home energy-efficient.

Smart security devices, like doorbells and cameras, can monitor your home and alert you to any suspicious activity.

☀️ Tools & Companies (most popular only)

AlphaGo

AlphaGo is a computer program that uses machine learning (ML) to play the board game "Go". Go has been around for thousands of years and is known for its complexity - call it chess on steroids. It is really hard for computers to win.

The program was created by Google's DeepMind and it made headlines in 2016 when it beat one of the best human Go players in the world, arguably making it the strongest Go player in history.

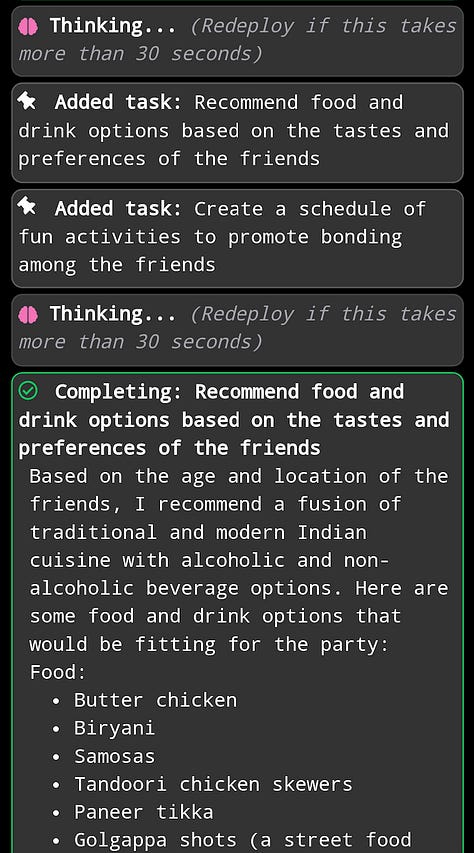

AutoGPT

AutoGPT was released as an open-source project on GitHub. With access to the internet and GPT-4, AutoGPT is capable of breaking the goal down into specific tasks and then solving each step on its own (you don’t have to describe each step, hence the “auto”).

Let’s take an example: You have to write a review of the latest research on seasonal flu.

Goal: Write a literature review of the latest (everything after 2020) research on seasonal flu and its effects on human health. Include all sources you use.

AutoGPT: Defines tasks that it will need to carry out on its own. Tasks: 1. Get all research papers after 2020 on seasonal flu 2. Read them and find patterns and the strongest point of view emerging from them 3. Compile this into a literature review document.

Then, AutoGPT goes ahead and does all tasks (which may have subtasks!) for you and gives you a complete document.

Chats

Conversational AI chats enable users to interact with AI in a natural manner using language they use in real life. With access to the internet, these AIs can provide more powerful responses to queries. They can also be used for creative tasks such as generating ideas and creating first drafts.

Bard AI

Bing Chat

ChatGPT

Ernie Bot

DeepMind

Google’s Artificial Intelligence research lab.

Popular tools

Copilot (Github)

Copilot is an AI helper for programmers. Users can interact with Copilot in natural language and receive suggestions on their code.

Duolingo Max and Khanmigo

Educational AIs (or AI tutors) that resolve doubts and provide personalised help.

Microsoft 365 Copilot

Microsoft Copilot is an AI assistant and productivity tool that can be used across Microsoft’s apps to accomplish tasks such as replying to an email, analysing data, generating reports, creating the first draft of a document, and generating ideas.

Generative AI for Images

AIs that generate images for a given prompt:

Midjourney

Dall-E

Stable Diffusion

Adobe Firefly

Applications: Generating creative images, characters, graphics, designs, and so on.

OpenAI

The artificial intelligence research lab that built GPT, ChatGPT, and Dall-E.

☀️ Human Interactions & Society

Anthropomorphism

Attributing human-like characteristics to non-human entities such as animals, objects, or even artificial intelligence.

Imagine your favourite cartoon character that isn't human, but talks, thinks, and behaves like one – that's anthropomorphism in action. Another example is talking to assistants such as Alexa / Siri and feeling like you are talking to a person.

Anthropomorphism makes AI more relatable and easy to use.

Ethics in AI

The moral principles guiding the development and use of artificial intelligence.

It's the rulebook for AI — we need to have some guidelines in place if this technology is going to change our world.

A good example would be an AI system that screens job applicants. If it isn't programmed with ethical considerations, it might unintentionally favour certain candidates over others based on factors like gender or race, which will lead to unfair hiring practices. Ethical guidelines ensure that does not happen.

Responsible AI

Developing AI systems that are transparent, accountable, and beneficial to society.

You could say it's about raising well-behaved AI "children" that know their limits and respect their human "parents."

For instance, an AI-powered health app that diagnoses illnesses should be transparent about its accuracy and limitations. If it's not responsible, it could give you an incorrect diagnosis, making you believe you have the deadliest flu on the planet when you've actually just got a minor cold.

Technological singularity

A hypothetical point in the future when technology becomes so advanced that it changes the course of human existence and society in an irreversible manner.

Sounds intense, doesn’t it? In the context of AI, this could happen when AI starts rapidly improving itself without human intervention, far surpassing our intelligence levels, leading to unpredictable changes in the human world. For example, an AI developing an even more advanced AI, and so on.

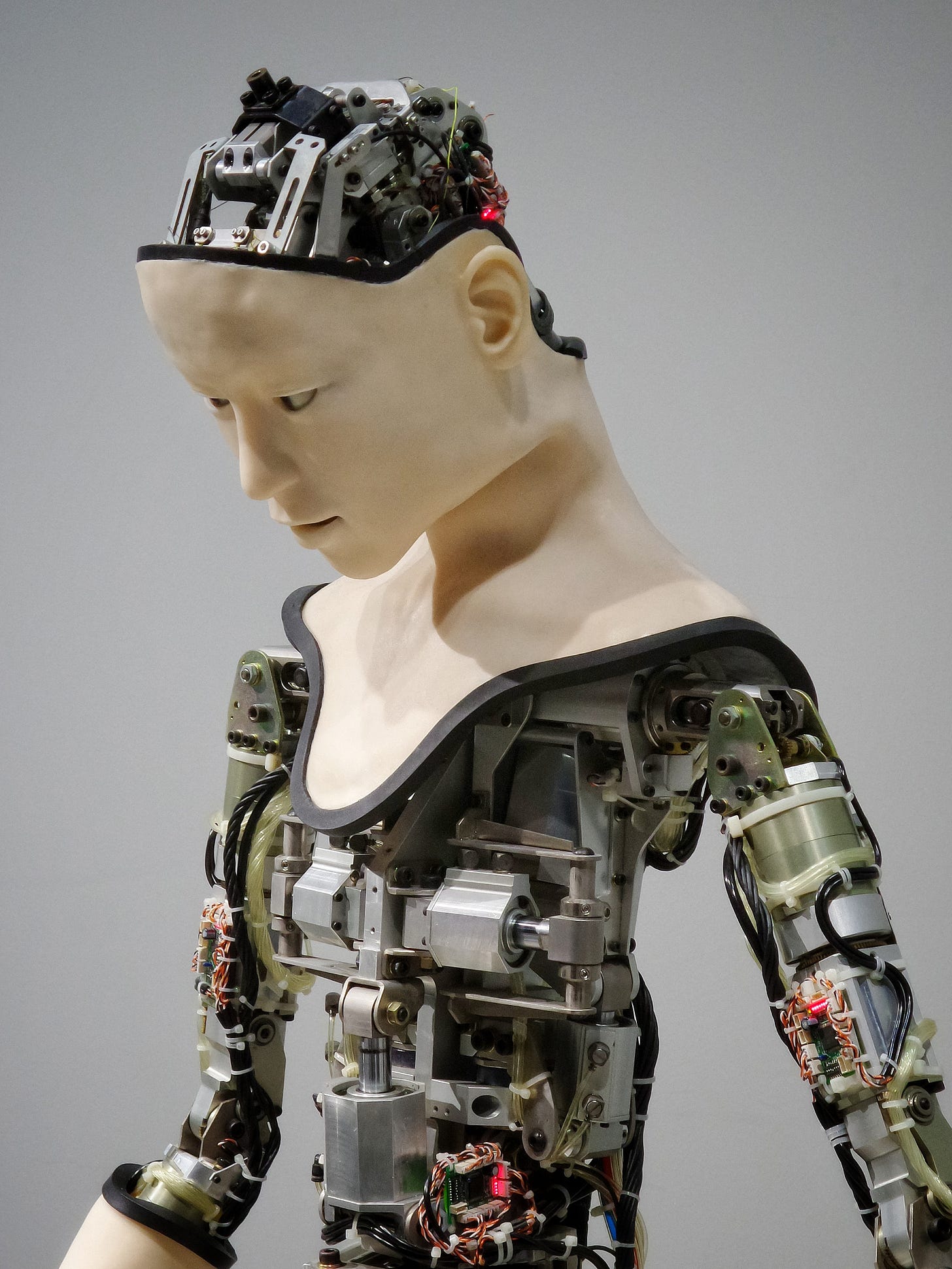

Uncanny valley

The eerie feeling we get when something looks and acts “almost” human, but not quite.

Remember those animated Barbie movies, or wax figures of your favourite celebrities? They are kind of realistic, but something is off, making them look creepy and unsettling.

With rapid developments in AI, uncanny valley may not be limited to visuals. We may experience an uncanny valley of the “mind” — machines that think like humans, almost.

🔬 AI/ML Concepts

There are some terms that you will hear repeatedly if you go deeper into how AI/ML models work, their strengths, and shortcomings:

Accuracy

How good a computer program is at making correct predictions or solving problems.

If you give a test to a computer program, accuracy would be the percentage of questions it gets correct.

Example: You teach a computer program what an apple looks like by showing it pictures of apples. After this, you give it a new set of 100 pictures of fruits, including some apples. If it correctly identifies 95 out of the 100 fruits (apple or not apple), then its accuracy is 95%.
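The apple example boils down to a simple percentage, sketched here in Python. The split of 30 apple pictures and 70 other pictures is an assumption added for illustration; only the "95 out of 100" comes from the example.

```python
def accuracy(predictions, actual):
    """Return the percentage of predictions that match the true answers."""
    correct = sum(p == a for p, a in zip(predictions, actual))
    return 100 * correct / len(actual)


# Hypothetical test: 100 pictures, the first 30 of which are apples.
actual = ["apple"] * 30 + ["not apple"] * 70
# The program gets 5 of the apple pictures wrong and everything else right.
predictions = ["apple"] * 25 + ["not apple"] * 75

print(accuracy(predictions, actual))  # 95.0
```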

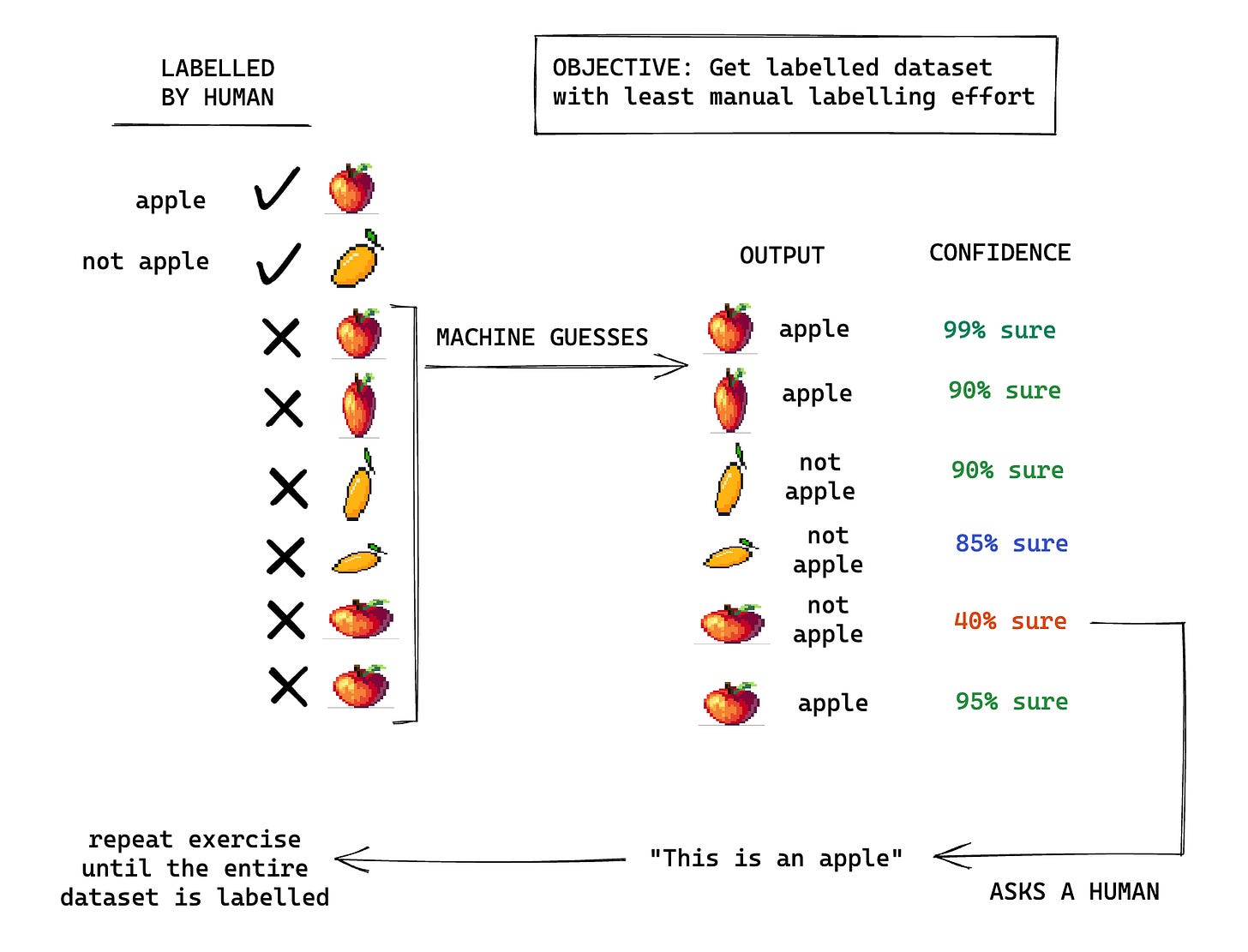

Active Learning

Models can interactively ask a human for help to learn better and reduce manual effort.

Active learning is best explained using an example. Imagine you have a training dataset with 100,000 images of fruits. If you had to label each of them manually, it would take you a LONG time. What if you could manually label, say, 10% of the dataset and let the machine label the rest, helping the machine occasionally whenever it was stuck? This is called “active” learning by the machine because it interacts with you to get information. The chart below shows how this works.
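One common way to decide when the machine is "stuck" is to look at how confident it is in each guess. The Python sketch below assumes the model reports a confidence between 0 and 1 for every image; the file names, confidence values, and the 0.8 cut-off are all hypothetical.

```python
def split_by_confidence(model_guesses, threshold=0.8):
    """Separate images the model can label itself from ones a human should label."""
    auto_labeled = {}
    ask_human = []
    for image, (label, confidence) in model_guesses.items():
        if confidence >= threshold:
            auto_labeled[image] = label
        else:
            ask_human.append(image)
    return auto_labeled, ask_human


# Hypothetical model output: image name -> (guessed label, confidence from 0 to 1).
guesses = {
    "img_001.png": ("apple", 0.97),
    "img_002.png": ("banana", 0.55),
    "img_003.png": ("cherry", 0.91),
    "img_004.png": ("apple", 0.62),
}

auto_labeled, ask_human = split_by_confidence(guesses)
print(ask_human)  # ['img_002.png', 'img_004.png']
```

Only the two uncertain images go to a human, whose answers can then be added to the training data to help the model on similar images next time.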

Algorithm

A step-by-step process / set of rules that a computer program follows to solve a problem or make decisions.

Example: Let's say you have to arrange the numbers in a list in ascending order. Here is what an algorithm might do:

1. Start with a given list of numbers and find the smallest number in the list.

2. Switch the smallest number with the first number in the list.

3. Now, start from the second number onwards (the first place already holds the smallest) and repeat steps 1 & 2 until the whole list is done.

This algorithm is called “Selection Sort”, not that you need to know that!
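If you would like to see those steps as code, here is a short Python sketch of Selection Sort. The function name and the sample list are just for illustration.

```python
def selection_sort(numbers):
    """Return a new list with the numbers in ascending order."""
    result = list(numbers)  # work on a copy so the input is left unchanged
    for start in range(len(result)):
        # Step 1: find the smallest number in the unsorted part.
        smallest = start
        for i in range(start + 1, len(result)):
            if result[i] < result[smallest]:
                smallest = i
        # Step 2: switch it with the first number of the unsorted part.
        result[start], result[smallest] = result[smallest], result[start]
    return result


print(selection_sort([5, 2, 9, 1, 7]))  # [1, 2, 5, 7, 9]
```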

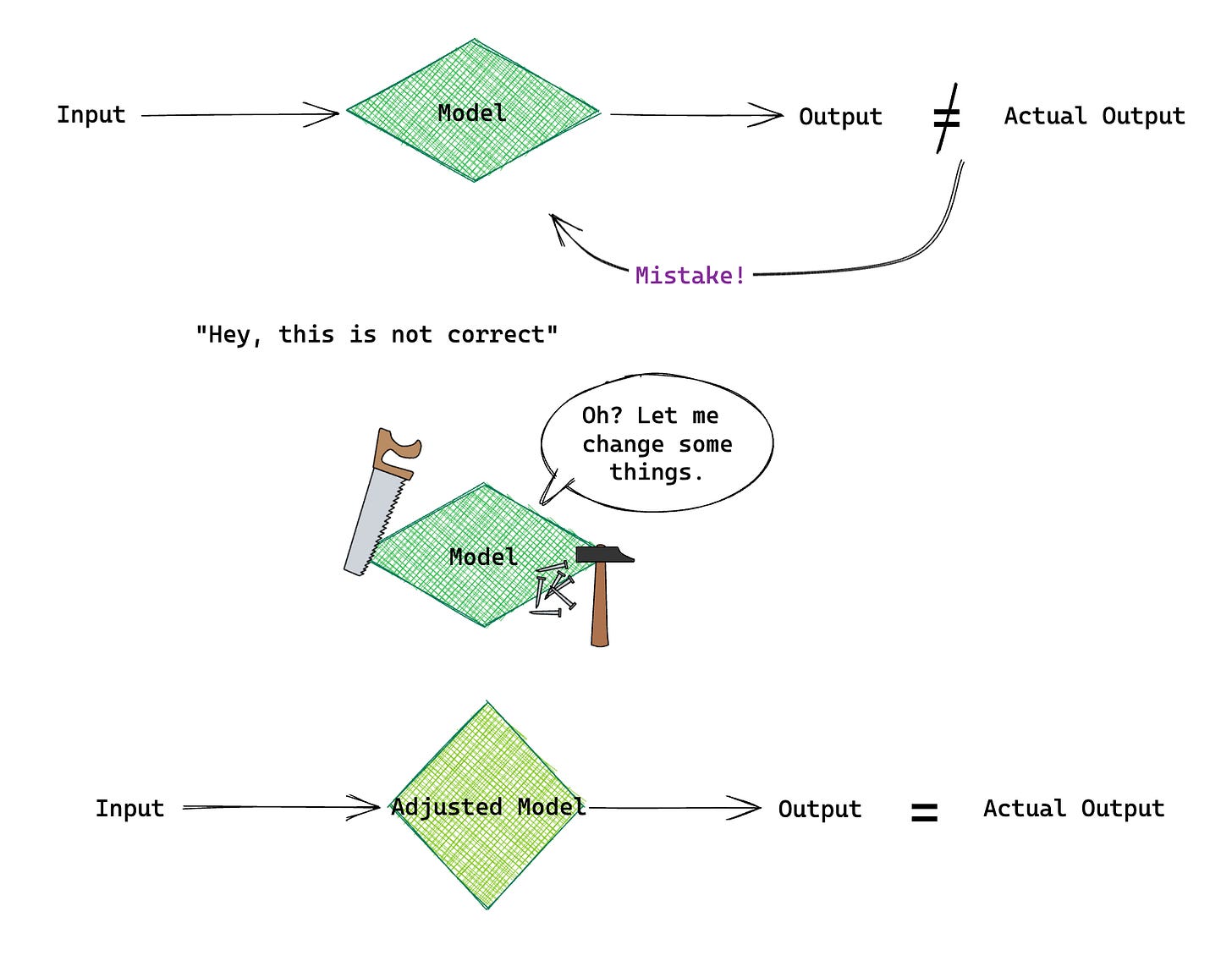

Backpropagation

Models can learn from mistakes to adjust their parameters (or internal settings). In backpropagation, a model improves itself by measuring how far its output is from the actual output for given inputs.

Backpropagation is a key technique to improve the performance of Neural Networks. See the diagram below to understand how it works.

Example: Imagine a group of students solving math problems together. After finishing a problem, they check their answers against the correct answer. If they get it wrong, they go back to see where they made a mistake and try to learn from it, so they don't make the same error again.
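For readers comfortable with a little code, here is a heavily simplified Python sketch of the idea. It uses a made-up "network" with only two settings (`w1` and `w2`) and a single training example; the starting values, learning rate, and number of steps are arbitrary choices for illustration. Real neural networks have millions of settings, but the loop is the same: guess, measure the error, pass the error backwards, adjust.

```python
def train(x, target, w1=0.5, w2=0.5, learning_rate=0.01, steps=200):
    """Fit a two-step model, prediction = w2 * (w1 * x), to a single example."""
    losses = []
    for _ in range(steps):
        # Forward pass: compute the prediction and how wrong it is.
        hidden = w1 * x
        prediction = w2 * hidden
        losses.append((prediction - target) ** 2)

        # Backward pass: send the error back through each step (chain rule).
        d_prediction = 2 * (prediction - target)
        d_w2 = d_prediction * hidden
        d_hidden = d_prediction * w2
        d_w1 = d_hidden * x

        # Nudge each setting in the direction that reduces the error.
        w1 -= learning_rate * d_w1
        w2 -= learning_rate * d_w2
    return w1, w2, losses


w1, w2, losses = train(x=2.0, target=3.0)
print(round(w2 * w1 * 2.0, 3), losses[0], losses[-1])
```

The first error is large and the last one is tiny, which is the students in the example above getting better with every checked answer.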

Clustering

Grouping similar things together.

If you had to perform “clustering” on a set of fruits, you would create groups of similar fruits. For example, you may club all the apples together, bananas together, and so on. Alternatively, you may club all the fruits with seeds together and those without seeds separately.
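Here is a small Python sketch of clustering in action. It groups fruits by weight alone, using a stripped-down version of a popular method called k-means with two groups. The weights are invented, and notice that the code is never told which fruit is which.

```python
def two_means(values, rounds=10):
    """Split numbers into two groups around two moving centres (1-D k-means, k=2).

    Assumes the values are not all identical.
    """
    centres = [min(values), max(values)]  # simple deterministic starting guess
    groups = [[], []]
    for _ in range(rounds):
        groups = [[], []]
        for value in values:
            # Put each value in the group whose centre is closest.
            nearest = 0 if abs(value - centres[0]) <= abs(value - centres[1]) else 1
            groups[nearest].append(value)
        # Move each centre to the average of its group.
        centres = [sum(group) / len(group) for group in groups]
    return groups


# Hypothetical fruit weights in grams: a few cherries and a few apples.
weights = [8, 150, 9, 160, 7, 155, 10]
light, heavy = two_means(weights)
print(light, heavy)  # [8, 9, 7, 10] [150, 160, 155]
```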

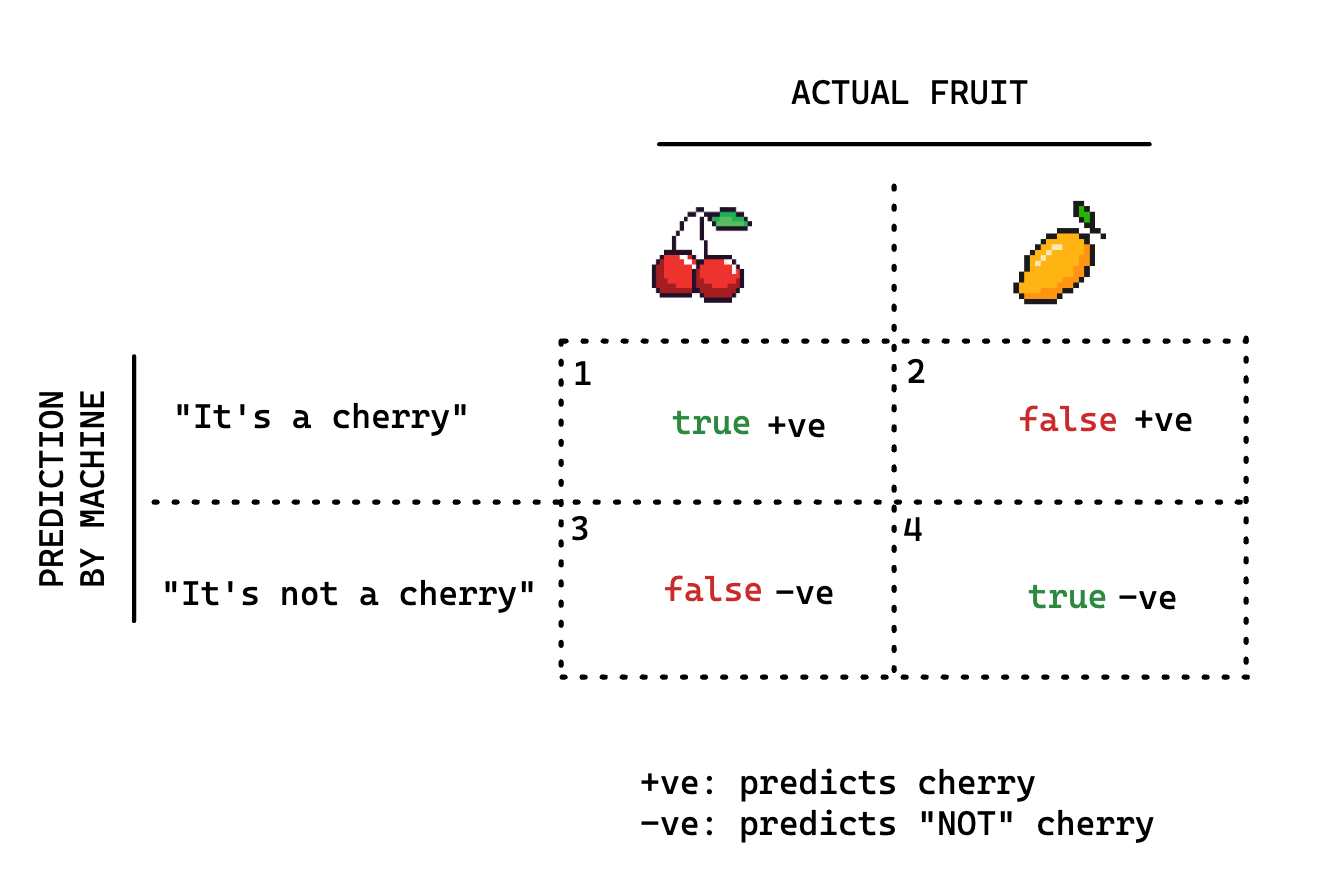

Confusion Matrix

Measures how the model performed in classification of data.

Take the following example where the model’s task is to predict whether an image is that of a cherry or not:

All key metrics for model performance can be calculated based on the confusion matrix. Here are some important metrics:

Accuracy

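How often the model is correct. From the confusion matrix in the image above, accuracy is the number of correct predictions (cherries identified as cherries, plus non-cherries identified as non-cherries) divided by the total number of predictions.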

Precision

How often the model is right when it predicts positive values. In our example above, it refers to how many times the model correctly identifies cherries, out of all the times it predicts that a fruit is a cherry.

Recall

How often the model predicts positive values correctly of all the times it is given positive values. In our example above, it refers to how often the model predicts cherries correctly, out of every time a cherry appears.

F1-score

As you can see, precision and recall tackle two different types of prediction performances but they look so similar that I have no doubt why the confusion matrix is called a “confusion” matrix.

Accuracy, on the other hand, does not capture the nuance of how often the model is actually able to understand what is a cherry and what isn’t.

F-1 score combines both Recall and Precision to give us a more nuanced metric than accuracy.

When is F-1 score better than Accuracy?

Consider a case where you have to predict whether a tumour is cancerous or not. False negatives are quite scary in this case. Your overall accuracy may be high but if you fail to identify cancerous tumours, it may cost a life. In such cases, accuracy only tells you how the model is doing “overall”.
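To see the difference in numbers, here is a Python sketch that computes all four metrics from the cells of a confusion matrix (true positives, false positives, false negatives, true negatives). The tumour counts are hypothetical and chosen to make the point as starkly as possible.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute common metrics from the four cells of a confusion matrix.

    Assumes the model made at least one positive prediction and that
    at least one positive case exists, so no division by zero occurs.
    """
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical screening of 1,000 tumours, 10 of which are cancerous.
# The model finds only 1 of the 10 and raises no false alarms.
metrics = classification_metrics(tp=1, fp=0, fn=9, tn=990)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Accuracy comes out above 99% even though nine out of ten cancerous tumours were missed, while recall (10%) and the F1-score (about 18%) make the problem obvious.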

Decision Trees

A sequence of decisions to classify data points.

Decision trees are best explained with diagrams. Look at the following algorithm (on the right) that identifies whether the data point is a red circle or a green one, based on a sequence of questions about where they lie on the chart (on the left).
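In code, a decision tree is little more than a chain of yes/no questions. The Python sketch below uses invented cut-off values (5 and 3) that are not taken from the diagram; in practice, a decision tree algorithm learns the questions and cut-offs from the data.

```python
def classify_point(x, y):
    """Classify a point by asking a short sequence of yes/no questions."""
    # The thresholds below are invented for illustration only.
    if x < 5:
        return "red"
    if y < 3:
        return "green"
    return "red"


print(classify_point(2, 8))  # red
print(classify_point(7, 1))  # green
print(classify_point(7, 6))  # red
```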

Feature Extraction

With feature selection (as we discussed earlier), we choose relevant features for the model. Feature extraction takes it one step further.

When selected features can be mapped to a smaller set of features (extraction), we reduce the risk of the model getting confused with too many features.

Here’s an example: Let’s say that you want to describe your friend to someone who has never met them so that they can identify your friend in a group. You can select physical features such as hair colour, eye colour, height, how tall they look in a group compared to others, speaking speed, and so on.

Notice how height and how tall they look in a group compared to others may be related? What if we combined them into one feature? That will be feature extraction.

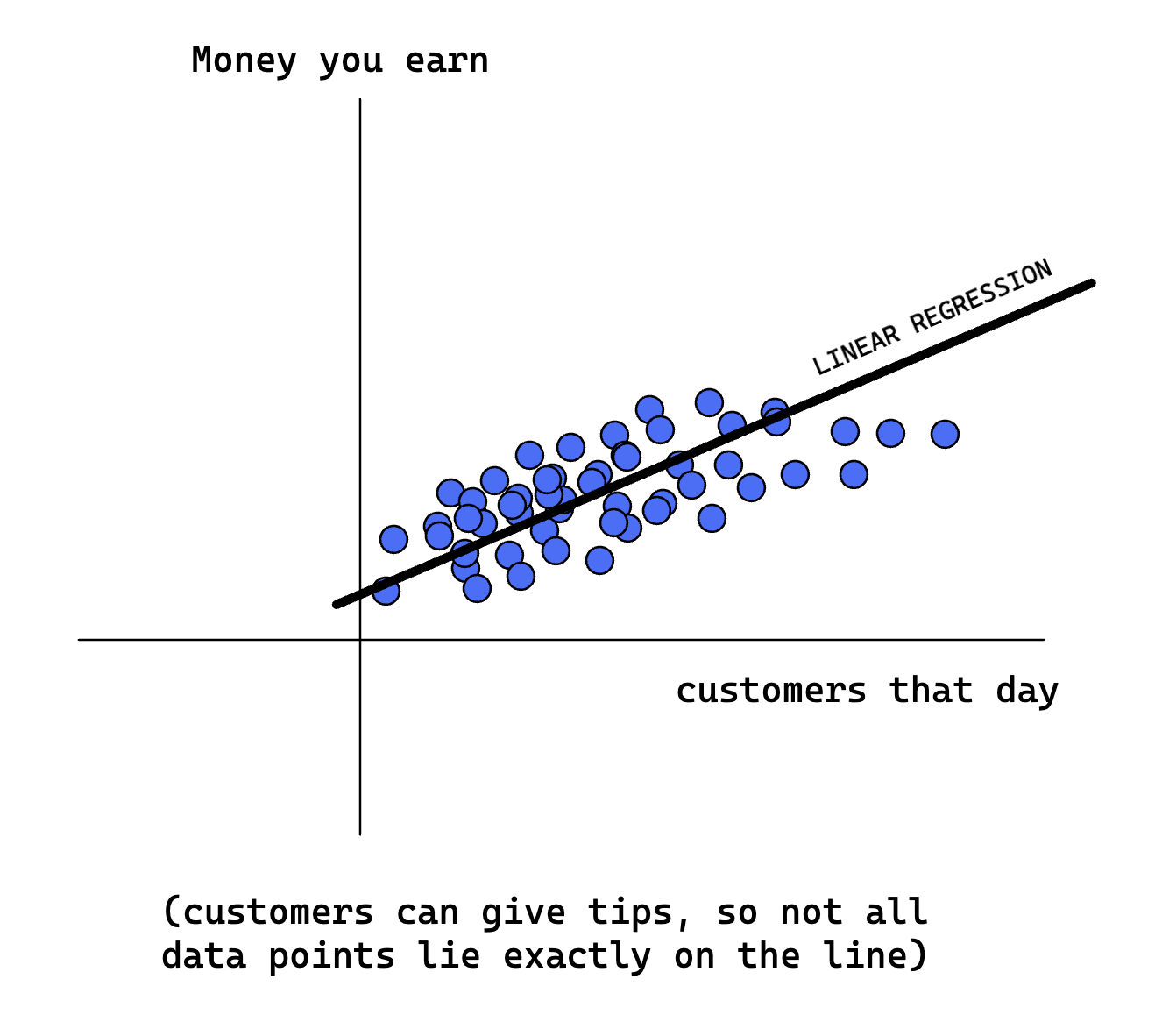

Linear Regression

Finding a linear relationship between two variables to understand how they are related and predict how they will change with respect to each other.

Imagine you're trying to predict how much money you'll earn from your lemonade stand. When you have more customers, you earn more money. By observing this relationship, you can estimate your earnings based on the expected number of customers, say, tomorrow.
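The lemonade stand can be sketched in Python as follows. The customer and earnings figures are made up, and the code uses the standard "least squares" way of drawing the best straight line through the points.

```python
def fit_line(xs, ys):
    """Return the slope and intercept of the least-squares line through the points."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical lemonade stand records: customers per day and earnings per day.
customers = [10, 20, 30, 40]
earnings = [25, 45, 65, 85]

slope, intercept = fit_line(customers, earnings)
print(slope * 50 + intercept)  # estimated earnings with 50 customers
```

With these numbers, each extra customer adds 2 to the day's earnings, so 50 customers would be expected to bring in about 105.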

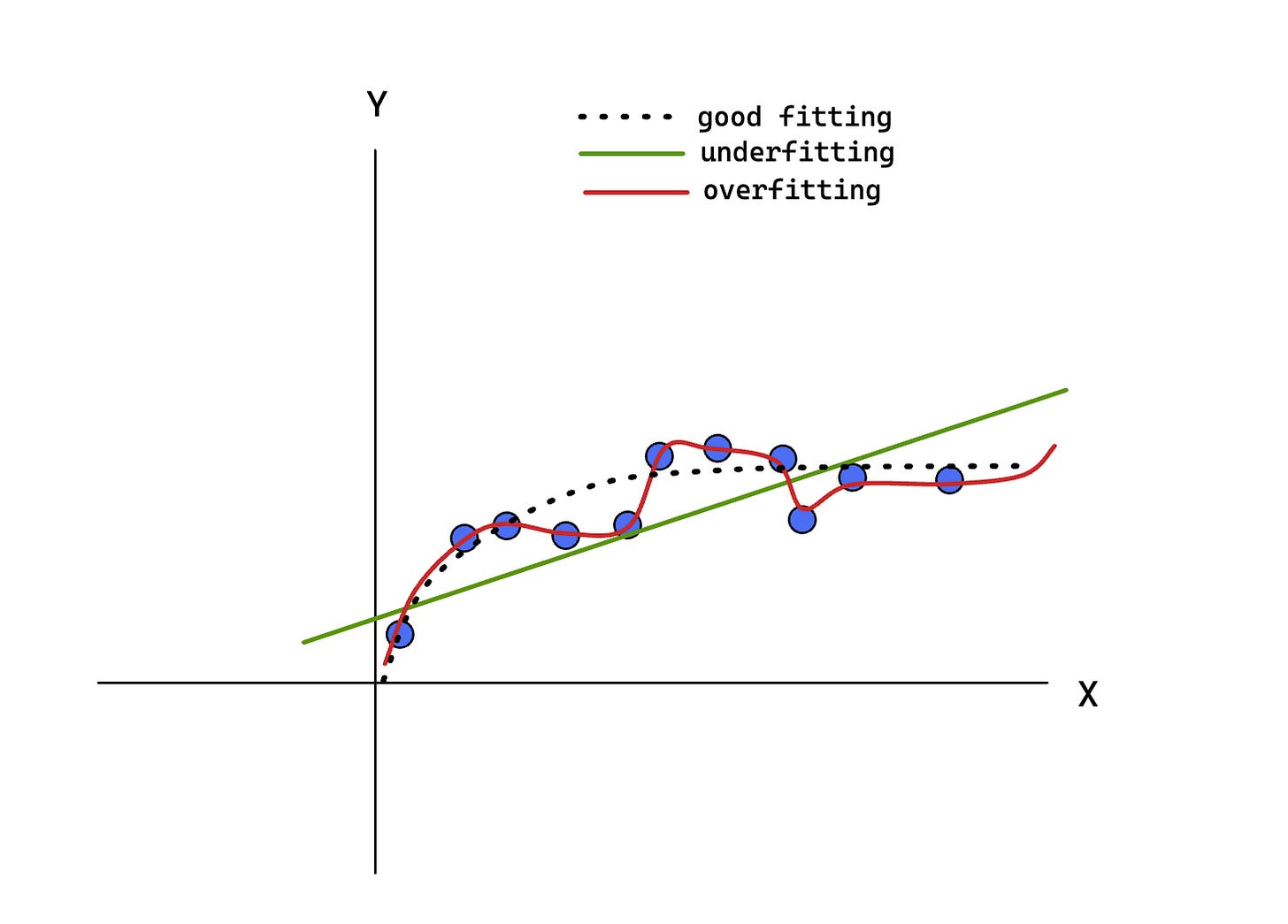

Overfitting and underfitting

When a model fits the data so perfectly that it tries to account for every single outlier, it overfits. When a model is lazy and does not even work well for data points in a similar range, it underfits.

Here is a visual example:

With this, the glossary comes to an end. Phew. I bet you can now confidently hold a conversation about AI with most people!